Towards zero touch operations in telco networks

Autonomic Observability

What would be like to manage complex telco network autonomously? Is that even possble?

No, not really. But that is why we provide autonomic methods for operating complex networks. Automation provides solution to repetitive simple tasks, without perception. Autonomous systems besides perception have cognition, so they really understand situations. Our telco solution is in between – relieves human force not only from simple repetitive tasks, but also from intelligent ones.

OPEN SOURCE COMPONENTS

End2end data pipelines

Whenever possible, we prefer open source components. Rsyslog, fluentd, Telegraf and Logstash are used for data collection and ingestion. Apache Kafka and Zookeeper as a message broker implementation. OpenSearch and Apache Druid as indexing and OLAP engines. Grafana, OpenDashboards and Superset as visualization tools. Apache Flink for ditributed data processing, while deep learning models are customly developed in the house based on Pytorch library.

LOGICAL BUILDING BLOCKS

Situational awareness with autonomic actions

There are many requirements which have to be fulfilled before the autonomic observability system can produce useful results. It encomopasses with data collection, transportation, processing systems for data analysis and anomaly detection, data stores with indexing and analytical capabilities, visualization tools. Combining al of them in a meaningful system requires some glue…

Data is ideally pushed from sources into Apache Kafka mesage bus in configured periods, from 1 to 5 minutes usually. The push approach removes neccessity to be aware of all deployed/running resources in order to collect from them, rather those components of resources which are running will simply produce their log/event/metric data. However, such practice is not engaged always (and we appreciate pull based retrieval as well) so an adapting mechanism had to be involved. Solution usually lies in usage of already existing components, such as fluentd, rsyslogd+omkafka, Logstash, Telegraf, NiFi, but also can be based on custom written adapter apps. We leverage both.

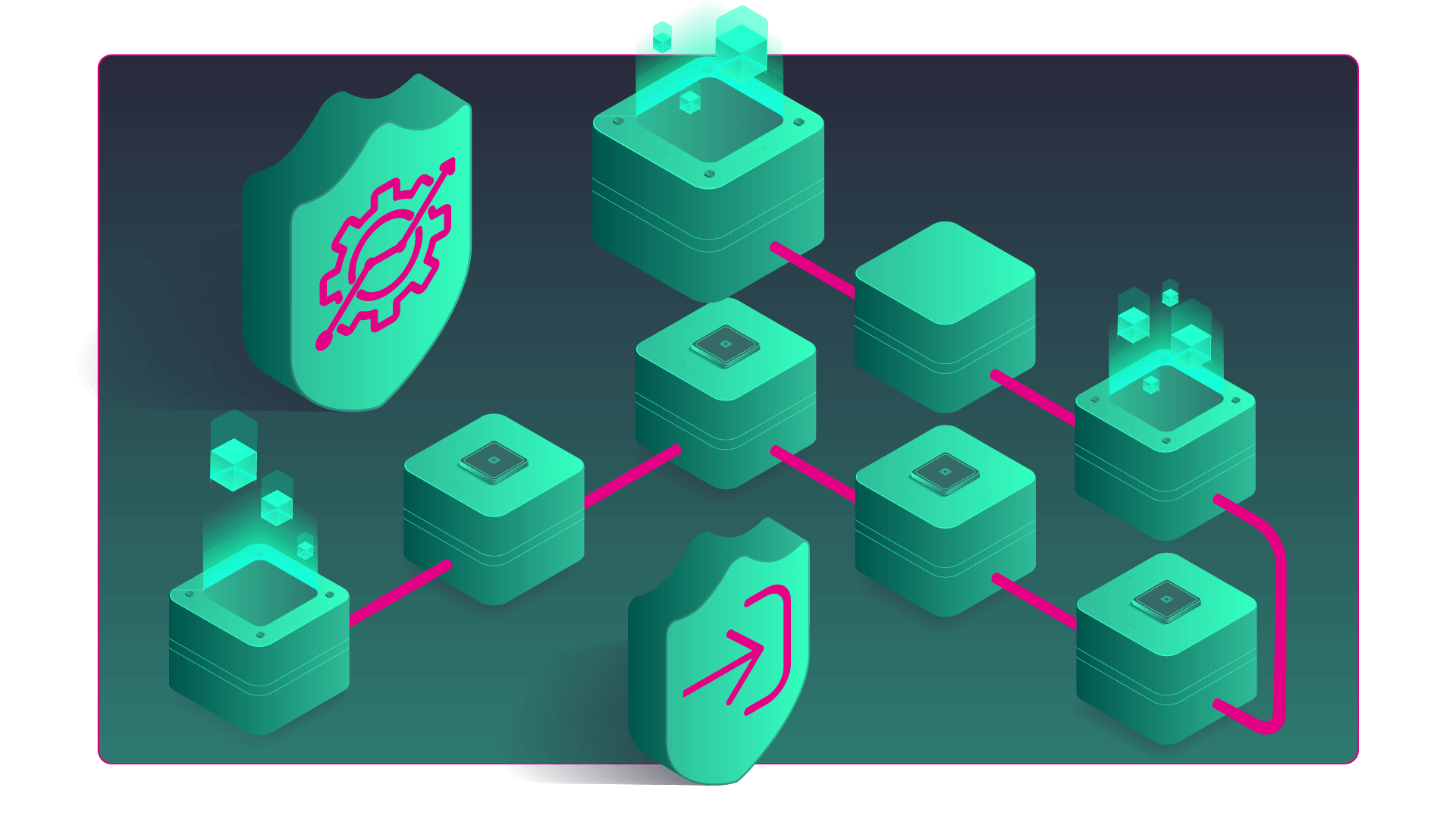

The main component of the sytem is set of statistic and deep learning models for anomaly detection from either metric data or semi-structured log/event/alarm data. Models operate on data provided via Apache Kafka message bus, while preprocessing mechanisms make sure to split and aggregate data prior to input into inference mechanism. Our DL models are either derived from state-of-the art models published in scientific papers, or completely our own approach. The result of every model is a json document describing the anomaly, providing the name of the class(es) which anomaly belongs to, etc.

In order to reduce amount of event-based information to the operating stuff, but as well to enable automatic reactions to detected scenarios, we introduced the concept of observations. The observation is a situation which takes place over the time. It can be of informational level, where no action is needed, or alerting level where the either manual or automated reaction is needed, such as reboot, redirect traffic, change DNS etnries, change the configuration, etc.

The events and alarms arising from the observed systems, i.e. via SNMP, various events and anomalies derived from metric data, anomalies derived from log data (both as sequence recognition and as a metric-derived-from-logs anomalies) are continously clustered into groups using several techniques in parallel. Examples of clustering techniques include simple keyword-based clustering, such as group all events/anomalies coming from the same host. Advanced approach is to use topology information to know which hosts and components are connected adn to group events based on that information. The most challenging way of consolidating events and anomalies is semantical approach, where we try to group together those ones with the same meaning, i.e. “connection lost” and “link down”.

To make things more exciting, an additional data input – network traffic packets is adding significantly more information, and significantly more complexity.

IMPROVEMENTS

Speeding up development

As always, building the infrastructure is very challenging task which pulls to focus of the project away from data science (as the main task). Therefore, it was decided to use Qubinets to allow fast infrastructure development. Even more, it was not quite clear which technologies/tools would make sense, so the Qubinets platofrm comes in very handy to make several try-outs with various components and find the optimal solution.

Data Infrastructure

Uncomprehensive speedup in the infrastracture modelling and deployment as well as data pipelines build-up.

Data Science

Major task was to recognize anomalies from log, alarm/event and metric data utilizing deep learning methods.

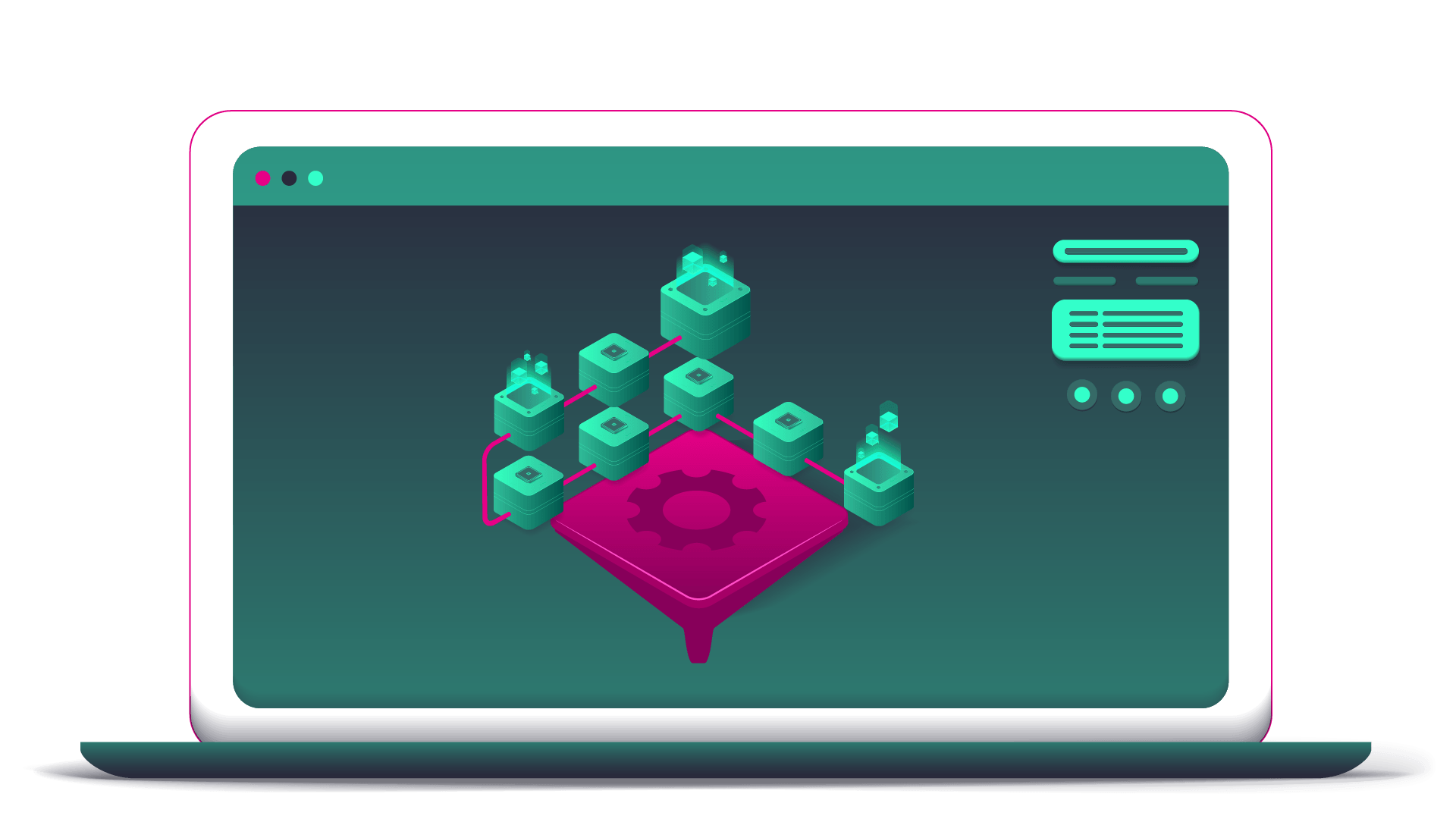

SYSTEM ARCHITECTURE

Real-time anomaly detection on data in motion

The solution is based on the Kappa architecture, because the goal was to provide anomaly detection and event correlation in (near-)real-time with up to few seconds of latency.