Managed and hosted

Qubinets for RabbitMQ®

RabbitMQ® is a message broker. It gives your applications a common platform for sending and receiving messages, and it gives your messages a safe place to live until they are received.
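The toy model below shows what "a safe place to live until received" means in practice. A broker hands a message to a consumer but keeps it until the consumer acknowledges it. If the consumer disappears before acknowledging, the message goes back to the queue. This is a minimal, in-memory sketch of that idea. The class and method names are hypothetical and are not the RabbitMQ client API. A real application would talk to the broker over AMQP 0-9-1 through a client library such as pika.

```python
from __future__ import annotations

from collections import deque
from itertools import count


class ToyQueue:
    """Hypothetical in-memory model of a single broker queue (not a RabbitMQ API)."""

    def __init__(self) -> None:
        self.ready: deque[str] = deque()   # stored messages waiting for a consumer
        self.unacked: dict[int, str] = {}  # delivered but not yet acknowledged
        self._tags = count(1)              # per-delivery tags, similar in spirit to AMQP delivery tags

    def publish(self, body: str) -> None:
        # A producer hands the message over; from now on the queue holds it.
        self.ready.append(body)

    def deliver(self) -> tuple[int, str] | None:
        # Give the oldest waiting message to a consumer, but keep a copy until it is acked.
        if not self.ready:
            return None
        tag = next(self._tags)
        self.unacked[tag] = self.ready.popleft()
        return tag, self.unacked[tag]

    def ack(self, tag: int) -> None:
        # Only an acknowledgement removes the message for good.
        del self.unacked[tag]

    def consumer_lost(self) -> None:
        # A consumer vanished before acking: put its messages back at the front, in order.
        for tag in sorted(self.unacked, reverse=True):
            self.ready.appendleft(self.unacked.pop(tag))


q = ToyQueue()
q.publish("order-1")
q.publish("order-2")
tag, body = q.deliver()  # the consumer receives "order-1" ...
q.consumer_lost()        # ... but crashes before acknowledging it
print(list(q.ready))     # ['order-1', 'order-2']: nothing was lost
```

Once a consumer processes the redelivered message and calls `ack`, the message is removed permanently.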

No credit card required. No additional fees. All features included.

Introduction

What, how, where

Get the most out of RabbitMQ® on the Qubinets platform

The Qubinets platform lets anyone, even someone who has never written a line of code, build and deploy RabbitMQ® in a matter of minutes. A few drag-and-drop actions and your RabbitMQ® instance is up and running.

The Qubinets platform and its components, such as RabbitMQ®, are packaged and deployed through Kubernetes.

The Qubinets platform manages your data infrastructure so that you can focus on your business.

Benefits

Benefits of Qubinets for RabbitMQ® as-a-service

With Qubinets' hosted and fully managed RabbitMQ, you can set up clusters, deploy new nodes, migrate between clouds, and upgrade existing versions with a single click, then monitor everything from a simple dashboard. That way you can get back to building applications instead of worrying about RabbitMQ’s complexity.

Automatic updates and upgrades. Zero stress.

Stressing about applying maintenance updates or version upgrades to your clusters? Do them both in a single click from your Qubinets dashboard. With no interruptions or downtime. Ever.

99.99% uptime. 100% human support.

Downtime is a disaster for critical applications. That’s why Qubinets makes sure you get 99.99% uptime. Plus, you get access to a 100% human support team — in case you need a helping hand.
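For a sense of scale, the calculation below shows the downtime budget that a 99.99% uptime figure leaves. It assumes availability is measured over a 365-day year or a 30-day month. The measurement window is an assumption, since it is not stated here.

```latex
\begin{aligned}
\text{allowed downtime} &= (1 - 0.9999)\,T = 10^{-4}\,T \\
T_{\text{year}} &= 365 \times 24 \times 60 = 525\,600\ \text{min} \;\Rightarrow\; 52.56\ \text{min per year} \\
T_{\text{month}} &= 30 \times 24 \times 60 = 43\,200\ \text{min} \;\Rightarrow\; 4.32\ \text{min per month}
\end{aligned}
```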

Super-transparent pricing. No networking costs.

Qubinets for RabbitMQ comes with all-inclusive pricing. There are no hidden fees or charges, just one payment that covers everything from networking to data storage.

Scale up or scale down as you need.

Increase your storage, get more nodes, create new clusters or expand to new regions. RabbitMQ has never been this easy.

Use cases

How RabbitMQ® is used by Qubinets customers

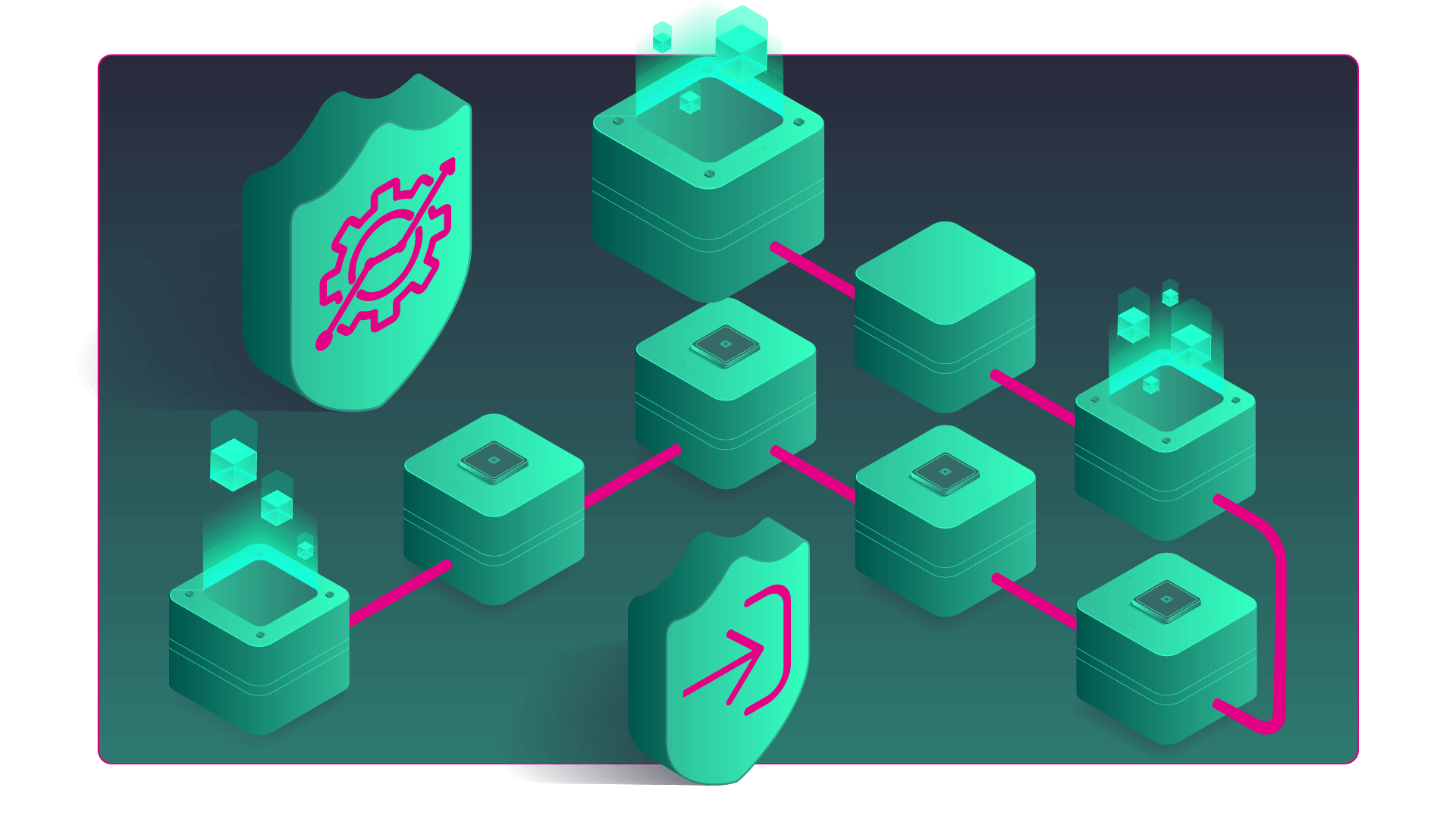

Qubinets provides RabbitMQ together with applications that can check whether data conforms to a schema, convert data from one schema to another, and apply minor changes, enrichments, lookups/mappings, and similar transformations. Depending on the situation, Logstash, Fluentd, Apache NiFi, or similar tools may also be used.
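The sketch below shows the kind of per-message transformation described above: validate against a schema, convert to a target schema, then enrich from a lookup table. It is a minimal illustration under stated assumptions. The schemas, field names, and the `DEVICE_SITES` table are hypothetical. In practice these steps would run in a consumer reading from RabbitMQ, or inside a tool such as Logstash, Fluentd, or Apache NiFi.

```python
from typing import Any

# Hypothetical source schema: field name -> expected Python type.
SOURCE_SCHEMA: dict = {"device_id": str, "temp_f": float, "ts": int}

# Hypothetical lookup table used for enrichment.
DEVICE_SITES: dict = {"dev-42": "plant-7"}


def conforms(msg: dict, schema: dict) -> list:
    """Return a list of problems; an empty list means the message conforms."""
    problems = []
    for name, expected in schema.items():
        if name not in msg:
            problems.append(f"missing field: {name}")
        elif not isinstance(msg[name], expected):
            problems.append(f"{name}: expected {expected.__name__}")
    return problems


def convert(msg: dict) -> dict:
    """Map the source schema to a target schema: rename fields, convert units."""
    return {
        "deviceId": msg["device_id"],
        "tempC": round((msg["temp_f"] - 32) * 5 / 9, 2),
        "timestamp": msg["ts"],
    }


def enrich(msg: dict, sites: dict) -> dict:
    """Add a field looked up from a mapping table (None if the key is unknown)."""
    return {**msg, "site": sites.get(msg["deviceId"])}


def transform(msg: Any) -> dict:
    """Validate, convert, and enrich one message; reject non-conforming input."""
    problems = conforms(msg, SOURCE_SCHEMA)
    if problems:
        raise ValueError("; ".join(problems))
    return enrich(convert(msg), DEVICE_SITES)


print(transform({"device_id": "dev-42", "temp_f": 98.6, "ts": 1700000000}))
```

Rejecting a message with a `ValueError` is only one possible design. A pipeline built on RabbitMQ could instead route non-conforming messages to a separate queue for later inspection.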

Our data pipeline starts with a GAC agent that serves as the interface for external systems. REST-based communication with an on-demand decoder is followed, over RabbitMQ, by several ML/DL models (chosen according to the input data and goals). Further components write the metadata into a PostgreSQL database, assemble the results, and publish additional data to RabbitMQ. This data is then ingested into Apache Druid, where it remains available for in-depth insight during annotation and for interactive analytics on the requests and responses (classifications).
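The pipeline above can be sketched in a single process to show how each stage hands data to the next. Every part here is a hypothetical stand-in. `queue.Queue` plays the role of the RabbitMQ queues and an in-memory SQLite database stands in for PostgreSQL. A plain list replaces Apache Druid, and the `decode` function replaces the REST call to the decoder. The two toy models are placeholders, since the actual ML/DL models are not described. A real deployment would use a RabbitMQ client library, a PostgreSQL driver, and Druid's own ingestion mechanisms.

```python
import json
import queue
import sqlite3

# Stand-ins (all hypothetical): queue.Queue for RabbitMQ queues,
# in-memory SQLite for PostgreSQL, and a plain list for Apache Druid.
decoded_q = queue.Queue()
results_q = queue.Queue()
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE metadata (request_id TEXT, model TEXT, label TEXT)")
druid_rows = []


def decode(request_id: str, payload: str) -> dict:
    """Stand-in for the on-demand decoder reached over REST."""
    kind = "image" if payload.startswith("IMG:") else "text"
    return {"request_id": request_id, "kind": kind, "data": payload.split(":", 1)[-1]}


# Hypothetical models, selected by input type ("depending on input data and goals").
MODELS = {
    "text": [("sentiment", lambda d: "positive" if "good" in d else "neutral")],
    "image": [("classifier", lambda d: "cat" if "cat" in d else "unknown")],
}


def run_models() -> None:
    """Consume decoded messages, classify them, store metadata, publish results."""
    while not decoded_q.empty():
        msg = decoded_q.get()
        for model_name, model in MODELS[msg["kind"]]:
            label = model(msg["data"])
            db.execute("INSERT INTO metadata VALUES (?, ?, ?)",
                       (msg["request_id"], model_name, label))
            results_q.put(json.dumps({"request_id": msg["request_id"],
                                      "model": model_name, "label": label}))
        db.commit()


def ingest_into_druid() -> None:
    """Drain the results queue into the analytics store."""
    while not results_q.empty():
        druid_rows.append(json.loads(results_q.get()))


# The GAC agent receives external requests and forwards them to the decoder.
for rid, payload in [("r1", "TXT:good service"), ("r2", "IMG:cat.png")]:
    decoded_q.put(decode(rid, payload))
run_models()
ingest_into_druid()
print(druid_rows)
```

Each stage reads from one queue and writes to the next, so the stages stay decoupled. The same property lets the real components scale or restart independently when RabbitMQ sits between them.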