Welcome back! Today we would like to talk about virtualization, which is at the core of everything we do here at Qubinets. Virtualization is a technology that has revolutionized the way we think about computing. It is now a vital aspect of modern IT infrastructure, providing organizations with greater flexibility, efficiency, and control over their computing resources. But what exactly is virtualization, how does it work, and how does it relate to containers, Docker, and Kubernetes platforms? Buckle up and join us on this journey to discover the power of virtualization!

Virtualization & Virtual Machines

If you are a Windows user, you may well have used software like VMware or VirtualBox to create a Linux virtual machine, especially before Windows Subsystem for Linux (WSL) made it possible to run Linux applications directly on Windows. A virtual machine gave you access to fundamental and useful tools like the Bash shell and Vim, as well as the ability to develop software using technologies and tools that are better suited to Linux or macOS.

So, why choose a virtual machine over dual booting your PC? Well, the main benefit is the ability to use features from multiple operating systems simultaneously and switch between them seamlessly. Plus, if something goes wrong on one of your virtual machines, the others continue to run unaffected. The downsides are that a single virtual machine can never use all of the host’s hardware resources, and that setting up and maintaining the system takes some effort and learning. In our view, though, the benefits outweigh the drawbacks 🙂 .

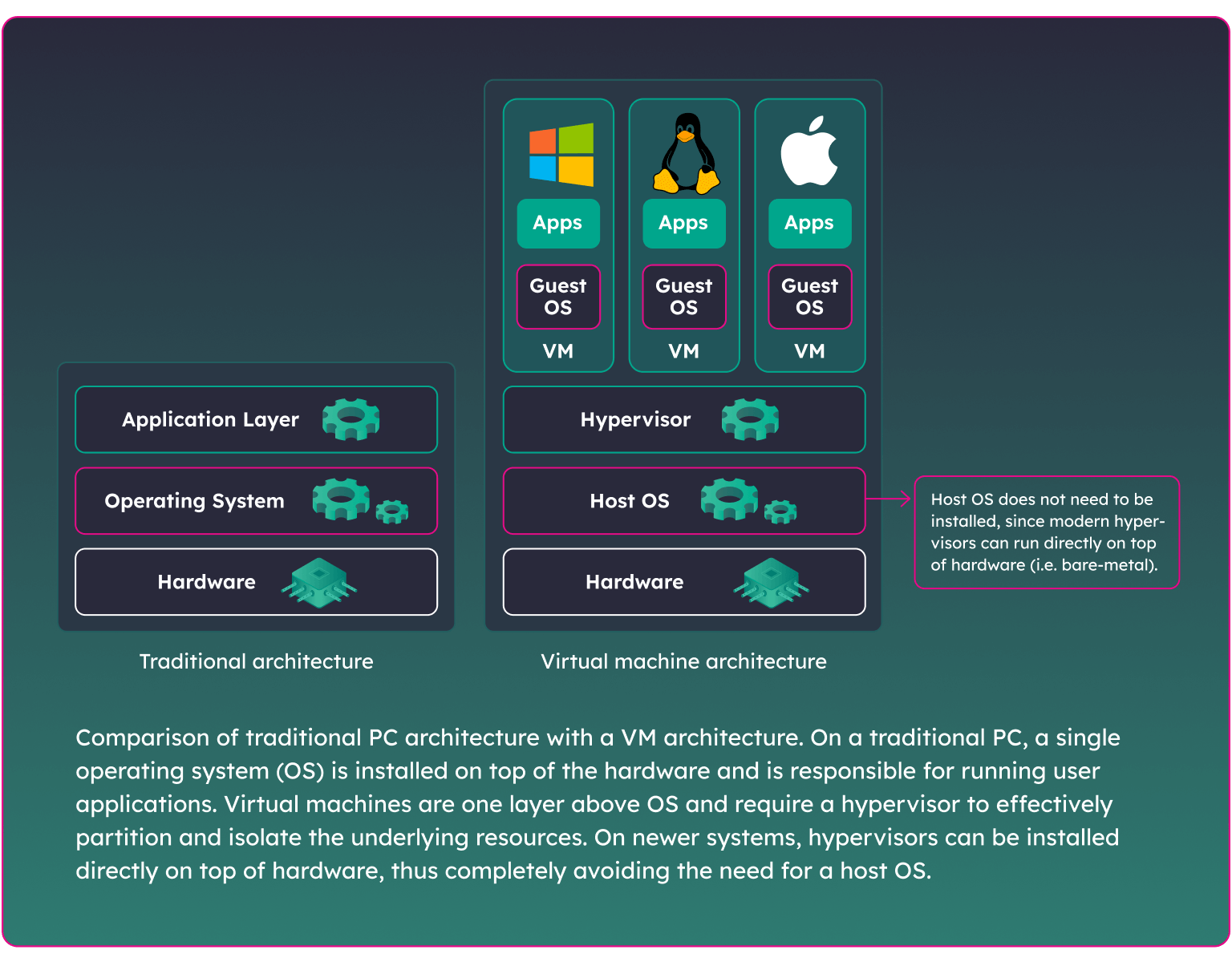

Okay, but how does it all work? The key idea is virtualizing the hardware. Basically, it means abstracting actual hardware into virtualized resources. To explain this concept better, let us take a look first at how a traditional computer setup works.

In a traditional computer setup, an operating system (OS) such as Windows or Ubuntu Linux is installed on top of the hardware. The OS acts as a middleman between the computer’s hardware and the applications running on it: it manages memory, allocates resources, schedules tasks, and maintains system security.

However, two operating systems cannot access the same hardware directly at the same time. Each one assumes it has exclusive control, so they would conflict with each other, for example by sending overlapping network requests or overwriting each other’s memory, and both systems could crash.

To overcome this issue, we need to virtualize hardware (i.e. create virtual machines) by introducing another layer of software – a hypervisor – which serves as an intermediate layer between the virtual machines and the actual hardware.

The hypervisor can run directly on bare metal or on top of a host operating system. It is responsible for managing virtual machines and allocating virtualized hardware resources, such as CPU, memory, and network interfaces. It must ensure that each virtual machine operates as its own standalone computer, complete with its own OS, applications, and virtualized hardware resources, and that it is isolated both from the physical hardware of the host machine and from the other virtual machines running on the same host.

This enables multiple virtual machines to not only run on the same physical hardware, but also to be easily moved between physical hosts.
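To make the hypervisor's bookkeeping role more concrete, here is a minimal toy sketch in Python. It is not how any real hypervisor is implemented: the `ToyHypervisor` and `VirtualMachine` names are hypothetical, and the model only tracks CPU cores and memory and refuses requests that exceed what the physical host has (real hypervisors are far more sophisticated and may, for example, overcommit resources).

```python
from dataclasses import dataclass, field


@dataclass
class VirtualMachine:
    """A VM as the toy hypervisor sees it: a name plus its virtual resources."""
    name: str
    vcpus: int
    memory_mb: int


@dataclass
class ToyHypervisor:
    """Hypothetical model: tracks physical capacity and hands out slices of it."""
    total_cpus: int
    total_memory_mb: int
    vms: dict = field(default_factory=dict)

    def free_cpus(self) -> int:
        # Physical cores not yet assigned to any VM.
        return self.total_cpus - sum(vm.vcpus for vm in self.vms.values())

    def free_memory_mb(self) -> int:
        # Physical memory not yet assigned to any VM.
        return self.total_memory_mb - sum(vm.memory_mb for vm in self.vms.values())

    def create_vm(self, name: str, vcpus: int, memory_mb: int) -> VirtualMachine:
        # Simplifying assumption: no overcommit, every VM gets a dedicated slice.
        if name in self.vms:
            raise ValueError(f"a VM named {name!r} already exists")
        if vcpus > self.free_cpus() or memory_mb > self.free_memory_mb():
            raise RuntimeError(f"not enough physical resources for {name!r}")
        vm = VirtualMachine(name, vcpus, memory_mb)
        self.vms[name] = vm
        return vm

    def destroy_vm(self, name: str) -> None:
        # Removing a VM returns its slice to the pool; other VMs are untouched.
        del self.vms[name]


if __name__ == "__main__":
    host = ToyHypervisor(total_cpus=8, total_memory_mb=16384)
    host.create_vm("linux-dev", vcpus=4, memory_mb=8192)
    host.create_vm("windows-test", vcpus=2, memory_mb=4096)
    print(host.free_cpus(), host.free_memory_mb())
```

Each virtual machine only ever sees the slice it was given, which is the isolation property described above in miniature.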

Containers

Although virtualization was first introduced through the concept of virtual machines, it has since evolved and been applied on many different levels, encompassing everything from individual applications to complete infrastructures, including Containers and Infrastructure as Code. Today, we are taking a closer look at the concept of a container.

Have you ever wondered how the term “container” emerged in the context of virtualization, and whether it has anything to do with shipping? Actually, the comparison to shipping is quite apt! Just as standardized shipping containers make it efficient to transport goods, containers in the computer world provide a standardized and easily portable form of packaging applications.

Each time you install an application, you must make sure that all of its dependencies and environment settings are set up correctly. And let us be honest, things often don’t go smoothly, and instructions are rarely straightforward. Plus, if you want to run multiple programs on the same computer, the setup and configuration can get even trickier, with the risk of conflicting requirements. Now, imagine having to deploy software for a microservice architecture, which consists of dozens or hundreds of services, on a cloud infrastructure with multiple nodes, repeating the same installation on each node. It is time-consuming and frustrating.
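The "conflicting requirements" problem can be illustrated with a small toy model in Python. Everything here is hypothetical (the service names, the `libfoo` package, and the helper functions); it is only a sketch of the idea, not of how any real package manager works. Two services pin different versions of the same library, so installing both into one shared environment fails, while giving each service its own environment works.

```python
# Hypothetical services and their pinned dependencies (made up for illustration).
APPS = {
    "billing-service": {"libfoo": "1.4"},
    "report-service": {"libfoo": "2.0"},
}


def install_into(environment, app, requirements):
    """Install one app's pinned packages into the given environment dict."""
    for package, version in requirements.items():
        installed = environment.get(package)
        if installed is not None and installed != version:
            raise RuntimeError(
                f"{app} needs {package}=={version}, "
                f"but {package}=={installed} is already installed"
            )
        environment[package] = version
    return environment


def install_shared(apps):
    """All apps share one environment, as on a single traditional machine."""
    environment = {}
    for app, requirements in apps.items():
        install_into(environment, app, requirements)
    return environment


def install_isolated(apps):
    """Every app gets its own private environment."""
    return {
        app: install_into({}, app, requirements)
        for app, requirements in apps.items()
    }


if __name__ == "__main__":
    try:
        install_shared(APPS)
    except RuntimeError as error:
        print("shared environment failed:", error)
    print("isolated environments:", install_isolated(APPS))
```

The isolated variant is the direction the rest of this post takes: package each application together with everything it needs, so that it cannot clash with its neighbours.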

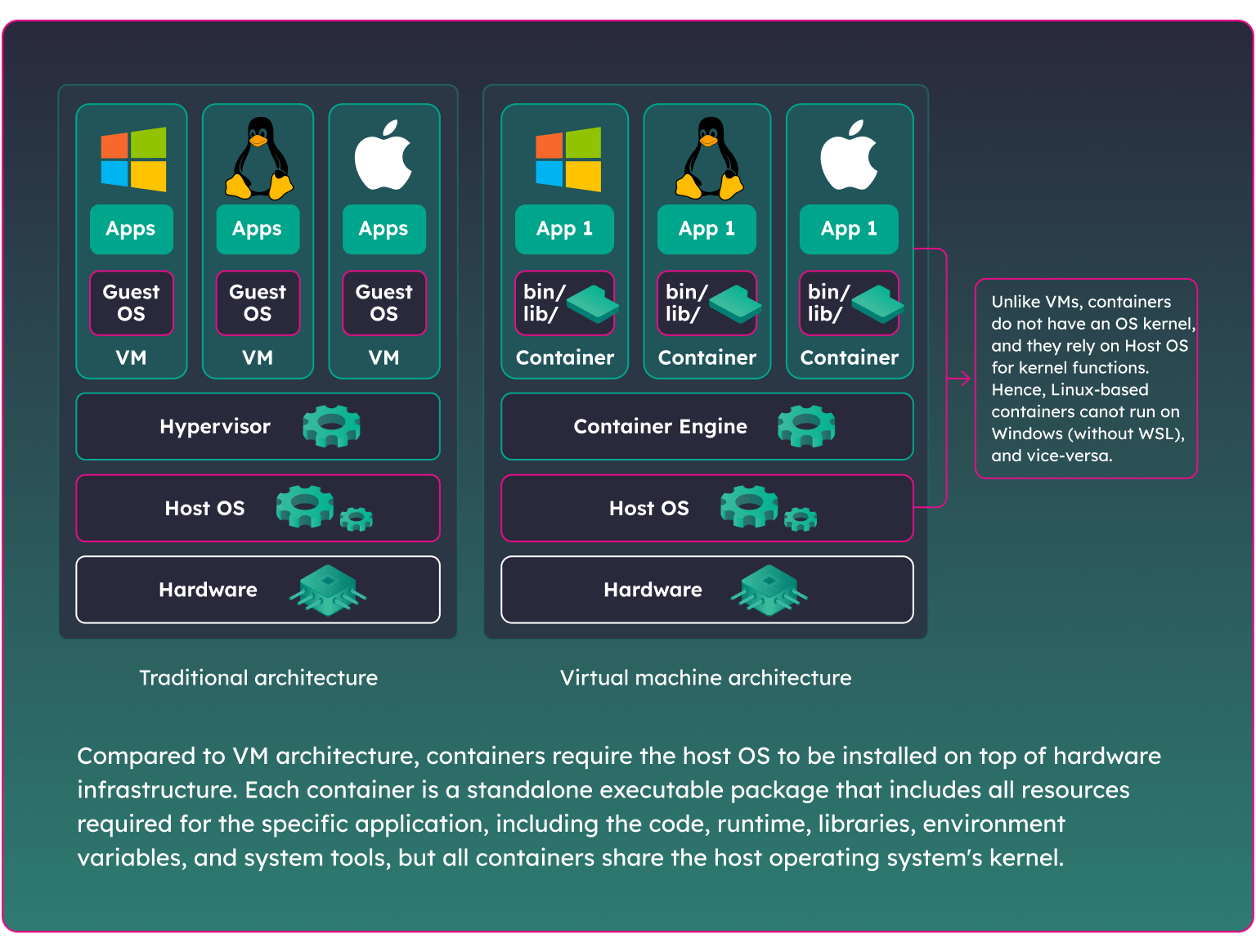

But what if you could package each service (application) in a virtual machine, configure it once, and then simply run copies of that virtual machine as many times as needed? Sure, that’s a great idea, but creating an entire virtual machine for just one application introduces too much overhead. Enter containers, a lighter-weight form of virtualization. Instead of abstracting the entire machine, containers abstract just a part of the operating system, creating isolated environments with their own file system, binaries, libraries, and configuration files, but using the kernel of the host operating system. Because of this, there is a general distinction between Windows-based and Linux-based containers: a Linux-based container needs a Linux kernel, and a Windows-based container needs a Windows kernel. A Linux-based container therefore cannot run directly on a Windows kernel, or vice versa; tools that run Linux containers on a Windows host do so by running a Linux kernel inside a virtual machine behind the scenes.
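One way to see the shared kernel for yourself is the small Python sketch below. It reports the kernel release the current process is running on and, where the file exists, the distribution name from `/etc/os-release`. The `describe_environment` function name is our own, and the expectation is an assumption about a typical setup: run on a Linux host and again inside a Linux container on that host, the kernel release should match while the distribution may differ. On Windows or macOS hosts, a container would instead report the kernel of the Linux virtual machine that the container tooling runs.

```python
import platform
from pathlib import Path


def describe_environment(os_release_path="/etc/os-release"):
    """Return the kernel in use and, if available, the userland distribution."""
    info = {
        "kernel_system": platform.system(),    # e.g. "Linux" or "Windows"
        "kernel_release": platform.release(),  # release string of the running kernel
        "distribution": "unknown",
    }
    path = Path(os_release_path)
    if path.is_file():
        # /etc/os-release belongs to the file system the process sees, so
        # inside a container it describes the container image, not the host.
        for line in path.read_text(encoding="utf-8").splitlines():
            if line.startswith("PRETTY_NAME="):
                info["distribution"] = line.split("=", 1)[1].strip().strip('"')
                break
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

The file system answer changes with the container, the kernel answer does not, which is exactly the split described above.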

Compared to traditional virtual machines, containers typically require less memory and disk space and start much faster, because they do not need to boot a full operating system. They provide a consistent and reproducible way to package and deploy software, making it easy to run the same software in different environments. Using containers can also improve the system’s stability and security, because by default a containerized app sees only the files and processes inside its own container, and access to anything beyond that, such as folders on the host or network ports reachable from outside, has to be granted explicitly.

Next time …

Thank you for taking the time to read our blog post about virtualization and containers. We hope you found it helpful and informative. In our next blog post, we are going deeper into the world of containers, exploring topics such as container images, engines, runtime, and more. So, stay tuned!