Introduction

Scaling AI infrastructure might sound like a big, scary task, and, let’s be honest, it can be! As AI projects move from pilot phases to full-scale deployment, scaling becomes critical. It can feel like juggling too many balls at once: developers and DevOps engineers need to expand computational power, manage growing datasets, and keep everything secure and compliant. Without a solid plan, what starts as an exciting opportunity can quickly turn into a frustrating bottleneck.

But before jumping into scaling, let’s make sure we’re on the same page with the essentials of AI infrastructure. If you’re still wrapping your head around those basics, we’ve covered them in our previous blog on AI infrastructure essentials. It’s a good starting point for understanding the foundation you need before thinking about scaling.

Now, let’s get back to today’s topic.

It’s no wonder scaling is such a challenge, given the massive growth we’re seeing in the AI space. The global AI infrastructure market is expected to reach roughly $421.44 billion by 2033, growing at an annual rate of 27.53% from 2024. This kind of growth shows how crucial scaling AI infrastructure will be for staying ahead in the game. That’s why we’re going to break down the major challenges that come with AI infrastructure scaling and share some best practices to help guide you through the journey.

The Need for Scaling AI Infrastructure

Scaling AI infrastructure means creating an environment that can grow alongside your AI project. It involves handling increased data volumes, more sophisticated models, and larger workloads without breaking a sweat. If your infrastructure isn’t ready to scale, even the best AI solutions can fall short, limiting both their effectiveness and business impact.

High-Performance Computing (HPC) and GPU processing are the engines that make AI run smoothly, enabling efficient training and inference for complex models. The numbers tell the story: cloud infrastructure spending is projected to grow by 26.1% in 2024, reaching $138.3 billion. With such explosive growth, leveraging cloud solutions for scalability is no longer just an option; it’s becoming essential. Whether you choose cloud or hybrid cloud, finding the right approach is key to keeping up with the increasing demand for computational power.

Challenges in Scaling AI Infrastructure

Computational Challenges

When we talk about scaling AI infrastructure, computational power is at the core. GPU processing is essential for training AI models, but as workloads grow, relying solely on on-premises infrastructure can quickly become limiting. High-Performance Computing (HPC) can provide that extra horsepower, but let’s face it—it can be costly. Specialized hardware, high maintenance costs, and the challenge of scaling quickly can make HPC a tough choice for many organizations.

On the other hand, expanding on-premises GPU clusters might seem like a good idea for keeping control, but it lacks the flexibility that cloud solutions provide. When you’re dealing with large-scale models that require distributed training across multiple nodes, it’s crucial to think beyond just adding more GPUs—adopting distributed processing through cloud or hybrid solutions becomes vital.
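
To make the distributed-training idea a bit more concrete, here is a minimal sketch of synchronous data parallelism, simulated inside a single Python process. The one-parameter model, the `shard` helper, and the worker count are all illustrative assumptions; on a real cluster the averaging step would typically be a collective operation (such as all-reduce) across nodes.

```python
# Sketch: synchronous data-parallel training, simulated in one process.
# Each "worker" holds a shard of the data and computes a local gradient;
# the gradients are averaged before the shared weight is updated.

def shard(data, num_workers):
    """Split data into num_workers interleaved shards."""
    return [data[i::num_workers] for i in range(num_workers)]

def local_gradient(w, samples):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)

def train(data, num_workers=4, lr=0.01, steps=200):
    shards = shard(data, num_workers)
    w = 0.0
    for _ in range(steps):
        # On real hardware, each of these would run on a separate node or GPU.
        grads = [local_gradient(w, s) for s in shards]
        # Stand-in for an all-reduce: average the gradients, then update.
        w -= lr * sum(grads) / len(grads)
    return w

# Hypothetical dataset generated from y = 3x
data = [(x, 3.0 * x) for x in range(1, 9)]
w = train(data)
print(round(w, 4))
```

Because every worker sees the same weight and only gradients are exchanged, adding workers shrinks the per-worker data load without changing the update rule, which is the property that makes this pattern attractive in cloud or hybrid setups.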

Cloud Computing vs. Hybrid Cloud

Choosing between cloud computing and hybrid cloud can be tricky. Cloud computing offers scalability and cost efficiency but might not always meet data sovereignty and security requirements. Hybrid cloud combines the best of both worlds, letting you control sensitive data locally while leveraging the cloud’s scalability. However, let’s be real—managing a hybrid setup can be complex, especially for teams new to juggling both cloud and on-prem environments.

The projected growth in cloud infrastructure spending (26.1% in 2024) highlights the increasing shift towards cloud solutions for scalability. Shared cloud infrastructure spending alone is expected to increase by 30.4%, reaching $108.3 billion. This makes cloud and hybrid cloud setups a central part of future AI infrastructure strategies. That said, balancing costs and project needs is key to making sure scaling doesn’t become unnecessarily resource-intensive.

Data Scalability

Handling data scalability is another major hurdle when scaling AI infrastructure. AI projects thrive on data, but managing and scaling these datasets is no simple task. As projects grow, making sure data flows efficiently can be the difference between success and failure. Data lakes are great for storing huge amounts of raw data, while data warehousing helps structure that data so it’s ready for use in specific AI applications. Still, scaling data while maintaining quality and consistency can be challenging, and poor data management can directly impact model performance.

AI projects also deal with different types of data—structured, semi-structured, and unstructured. Integrating all of these seamlessly for training and inference requires robust data governance and efficient processing pipelines, which become increasingly complex as data volumes expand.
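
As a rough illustration of what "integrating all of these" can look like, the sketch below funnels structured (CSV), semi-structured (JSON lines), and unstructured (free text) inputs into one common record shape, with a simple validation gate in front of the pipeline. The field names (`id`, `text`, `body`) and the validation rule are assumptions made for this example, not a prescribed schema.

```python
import csv
import io
import json

def from_structured(csv_text):
    """Structured: rows with a fixed schema (CSV with id,text columns)."""
    for row in csv.DictReader(io.StringIO(csv_text)):
        yield {"id": row["id"], "text": row["text"], "source": "structured"}

def from_semi_structured(json_lines):
    """Semi-structured: JSON objects whose fields may be missing."""
    for line in json_lines.splitlines():
        if not line.strip():
            continue
        obj = json.loads(line)
        yield {
            "id": str(obj.get("id", "")),
            "text": obj.get("body", ""),
            "source": "semi_structured",
        }

def from_unstructured(doc_id, raw_text):
    """Unstructured: free text, with whitespace collapsed."""
    yield {"id": doc_id, "text": " ".join(raw_text.split()), "source": "unstructured"}

def validate(record):
    """Simple governance gate: every record needs an id and non-empty text."""
    return bool(record["id"]) and bool(record["text"].strip())

streams = (
    from_structured("id,text\n1,invoice paid\n"),
    from_semi_structured('{"id": 2, "body": "sensor reading ok"}\n{"body": "no id"}'),
    from_unstructured("doc-3", "  free-form   support ticket \n text "),
)
records = [r for stream in streams for r in stream if validate(r)]
print([r["id"] for r in records])
```

The JSON object without an `id` is dropped by `validate`, which is the kind of check that gets harder to enforce consistently as sources and volumes multiply.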

Operational Challenges

Scaling AI infrastructure is about much more than just adding hardware and storage; it’s also about keeping everything running smoothly as demands increase. This is where MLOps comes in. Think of MLOps as a way to bring DevOps principles into the machine learning world. It helps automate and streamline things, but scaling MLOps comes with its own set of challenges. You need solid version control, consistent deployment pipelines, and strong collaboration between data scientists, engineers, and DevOps teams. And let’s be honest—keeping everyone on the same page as infrastructure scales can be tough, which can lead to inconsistencies in model deployment.
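
One way to picture "solid version control" for models is a content-derived version ID: the same weights and parameters always map to the same version, so teams can tell whether two deployments are really running the same thing. The sketch below is a hypothetical in-memory registry written for illustration; it is not how any particular MLOps tool stores versions.

```python
import hashlib
import json

def model_version(weights: bytes, params: dict) -> str:
    """Derive a short, deterministic version ID from weights and parameters."""
    digest = hashlib.sha256()
    digest.update(weights)
    # sort_keys makes the ID independent of dictionary key order
    digest.update(json.dumps(params, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()[:12]

registry = {}  # hypothetical in-memory registry: name -> {version: params}

def register(name, weights, params):
    """Record a model under its content-derived version and return the ID."""
    version = model_version(weights, params)
    registry.setdefault(name, {})[version] = params
    return version

v1 = register("churn-model", b"fake-weights-1", {"lr": 0.01, "epochs": 5})
v2 = register("churn-model", b"fake-weights-1", {"epochs": 5, "lr": 0.01})
v3 = register("churn-model", b"fake-weights-2", {"lr": 0.01, "epochs": 5})
print(v1 == v2, v1 == v3)
```

Registering the same artifact twice is a no-op here, while any change to the weights or parameters produces a new version.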

Security Concerns

AI security is a growing concern as AI infrastructure scales. The more data and models you have, the bigger the attack surface becomes. Ensuring data privacy and complying with regulations like GDPR requires proactive measures, including encryption, regular audits, and strict access controls.

Another big issue is model integrity. Attacks like adversarial inputs can manipulate models, leading to incorrect or harmful predictions. As AI infrastructure scales, it’s critical to ensure model robustness through adversarial training and regular testing.
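
To show how small an adversarial perturbation can be, here is a minimal sketch of the Fast Gradient Sign Method (FGSM) against a tiny logistic regression model. The weights, input, and `epsilon` are made-up values chosen for illustration. Adversarial training, in its simplest form, means generating inputs like `x_adv` and adding them to the training set with their correct labels.

```python
import math

def predict(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def fgsm(weights, bias, x, label, epsilon):
    """Shift each feature by epsilon in the direction that increases
    the cross-entropy loss for the true label."""
    p = predict(weights, bias, x)
    # For logistic regression, d(loss)/d(x_i) = (p - label) * w_i
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input
weights, bias = [2.0, -1.0], 0.0
x, label = [0.5, 0.2], 1

x_adv = fgsm(weights, bias, x, label, epsilon=0.3)
print(predict(weights, bias, x) > 0.5)      # original input is classified as 1
print(predict(weights, bias, x_adv) > 0.5)  # perturbed input is not
```

Each feature moves by at most 0.3, yet the predicted class flips, which is why robustness testing belongs in the regular test suite rather than being a one-off exercise.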

Best Practices for Scaling AI Infrastructure

Leverage High-Performance Computing (HPC) and GPU Clusters

Scaling computational resources is a must for AI projects. GPU clusters provide the power needed for training and inference, while HPC environments can handle massive AI workloads efficiently. Start small with GPU setups and expand as demand grows. Using orchestration tools to manage GPU workloads can help make sure resources are used effectively without unnecessary wastage.
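
As a toy picture of what an orchestration layer does for GPU workloads, the sketch below places jobs onto GPUs by memory requirement using a simple best-fit heuristic. The job names, GPU names, and memory figures are hypothetical, and real orchestrators weigh many more factors (priorities, preemption, topology), so treat this as an illustration of the packing idea only.

```python
def assign_jobs(jobs, gpus):
    """Place the largest jobs first, each onto the GPU with the least
    free memory that still fits it.
    jobs: {job_name: memory_gb}, gpus: {gpu_name: free_memory_gb}."""
    free = dict(gpus)  # copy so the caller's dict is not modified
    placement, pending = {}, []
    for job, need in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        candidates = [g for g, mem in free.items() if mem >= need]
        if not candidates:
            pending.append(job)  # wait for capacity or trigger a scale-out
            continue
        target = min(candidates, key=lambda g: free[g])
        free[target] -= need
        placement[job] = target
    return placement, pending

# Hypothetical workload and cluster
jobs = {"train-large": 60, "train-small": 20, "inference": 10, "finetune": 40}
gpus = {"gpu-0": 80, "gpu-1": 40}

placement, pending = assign_jobs(jobs, gpus)
print(placement)
print(pending)
```

The `pending` list is the interesting signal: when it stays non-empty, that is the cue to add capacity, which fits the "start small and expand as demand grows" approach.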

Cloud Computing and Hybrid Cloud Strategies

Cloud computing offers flexibility and scalability without huge upfront investments. It’s cost-effective and lets you scale resources as needed. For projects involving sensitive data, a hybrid cloud setup can provide both control and scalability. For instance, processing sensitive information on-premises while using the cloud for model training allows for compliance and efficiency.
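
A hybrid split like this usually comes down to a routing rule applied before data leaves your environment. The sketch below is one minimal way to express it; the list of sensitive fields is a hypothetical policy, and a real deployment would take it from your own compliance requirements.

```python
# Hypothetical policy: any record carrying one of these fields stays on-prem.
SENSITIVE_FIELDS = {"ssn", "email", "patient_id"}

def route(record):
    """Return 'on_prem' if the record has any sensitive field, else 'cloud'."""
    return "cloud" if SENSITIVE_FIELDS.isdisjoint(record) else "on_prem"

def partition(records):
    """Split records into per-destination queues."""
    queues = {"on_prem": [], "cloud": []}
    for record in records:
        queues[route(record)].append(record)
    return queues

records = [
    {"patient_id": "p-17", "heart_rate": 72},
    {"device": "sensor-4", "temperature": 21.5},
    {"email": "user@example.com", "clicks": 3},
]
queues = partition(records)
print(len(queues["on_prem"]), len(queues["cloud"]))
```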

Choosing between cloud and hybrid cloud depends on your project requirements, cost considerations, and the need for data control. With cloud spending expected to keep rising, understanding how these solutions align with your needs is crucial.

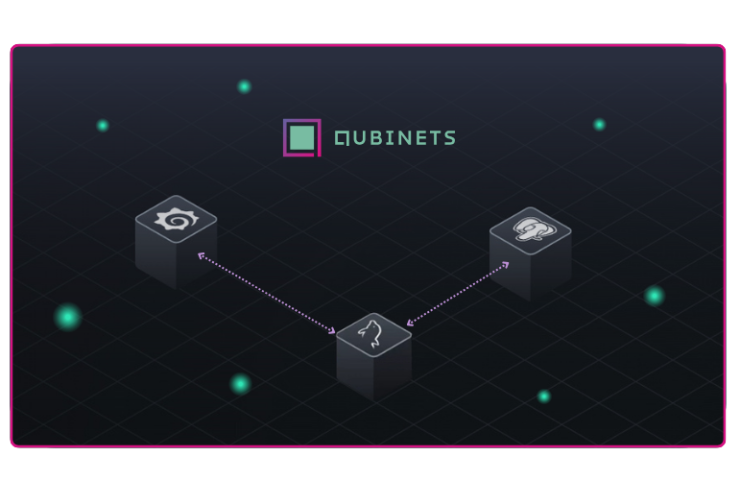

Implementing MLOps for Operational Efficiency

MLOps is all about making machine learning operational—ensuring that models move seamlessly from development to deployment. Automating model development, testing, and deployment helps maintain consistency and saves time, especially as infrastructure scales. Tools like Kubeflow and MLflow are popular choices that help keep everything running smoothly.

Implementing CI/CD pipelines is another best practice for staying agile. These pipelines automate the integration and deployment of new features, keeping models up-to-date and performing consistently, even as new data comes in.
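
In a model pipeline, the "deployment" step is often guarded by a promotion gate: a retrained model only replaces the production one if it is measurably better and still meets operational limits. Here is a minimal sketch of such a gate; the metric names and thresholds (`min_gain`, `max_latency_ms`) are assumptions for the example.

```python
def should_promote(candidate, production, min_gain=0.01, max_latency_ms=100):
    """Promote only if the candidate beats production accuracy by at least
    min_gain and stays within the latency budget."""
    better = candidate["accuracy"] >= production["accuracy"] + min_gain
    fast_enough = candidate["latency_ms"] <= max_latency_ms
    return better and fast_enough

# Hypothetical evaluation results from a pipeline run
production = {"accuracy": 0.90, "latency_ms": 80}
good = {"accuracy": 0.93, "latency_ms": 85}
marginal = {"accuracy": 0.905, "latency_ms": 70}
slow = {"accuracy": 0.95, "latency_ms": 250}

for name, candidate in [("good", good), ("marginal", marginal), ("slow", slow)]:
    print(name, should_promote(candidate, production))
```

A CI/CD job would call a check like this after evaluation and fail the pipeline stage when it returns `False`, so a weaker or slower model never reaches production automatically.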

Edge Computing for Low Latency

Scaling doesn’t always mean going bigger; sometimes, it means getting closer to where data is being generated. Edge computing brings AI capabilities to the edge, reducing latency and ensuring real-time decision-making. This is crucial for use cases like IoT devices and autonomous vehicles, where even the slightest delay can have major consequences.

By deploying AI at the edge, you reduce dependency on centralized resources, allowing faster insights. Imagine an autonomous vehicle making split-second decisions based on sensor inputs—that’s where edge computing really shines, making scalability more practical for latency-sensitive applications.

Containerization with Docker and Kubernetes

Containerization with Docker allows you to package AI models into a consistent environment, making deployment more predictable. When paired with Kubernetes, you can automate scaling based on demand. Kubernetes helps manage clusters of AI workloads, allocate resources efficiently, and ensure scaling happens seamlessly.

This approach also works well with a microservices architecture, where different parts of an AI application can be scaled independently. This modular setup makes scaling more flexible, allowing teams to adapt quickly to new demands.
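
For the "automate scaling based on demand" part, the Kubernetes Horizontal Pod Autoscaler documentation describes its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The Python function below is only a sketch of that arithmetic so you can reason about it; the function name and the min/max defaults are illustrative, and the real controller handles more cases (missing metrics, stabilization windows, and so on).

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA scaling rule for a single metric."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count alone
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds
    return max(min_replicas, min(max_replicas, desired))

# Hypothetical inference service targeting 60% average CPU utilization
print(desired_replicas(4, 90, 60))    # load above target: scale out
print(desired_replicas(4, 30, 60))    # load below target: scale in
print(desired_replicas(4, 63, 60))    # within tolerance: no change
print(desired_replicas(10, 120, 60))  # capped at max_replicas
```

With microservices, each component gets its own target and bounds, so a heavily used inference service can scale out without dragging a lightly used preprocessing service along with it.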

Ensuring AI Security

Scaling AI infrastructure also means scaling your security efforts. Best practices include implementing strict access controls, encrypting data, and regularly monitoring your systems for vulnerabilities. Compliance with data privacy regulations becomes even more critical as you process more data.

Regular penetration testing, updating security protocols, and using automated threat detection tools help identify security gaps early, keeping your AI systems secure even as they expand.
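
Strict access controls are easier to reason about when they are deny-by-default: a role can do only what it has been explicitly granted, and every decision is recorded. The sketch below shows that pattern with a hypothetical role-to-permission map and an in-memory audit log; the role and permission names are made up for the example.

```python
# Hypothetical role-to-permission mapping
ROLE_PERMISSIONS = {
    "data_scientist": {"dataset:read", "model:train"},
    "ml_engineer": {"dataset:read", "model:train", "model:deploy"},
    "auditor": {"audit_log:read"},
}

audit_log = []  # every access decision is recorded for later review

def is_allowed(role, permission):
    """Deny by default: unknown roles and ungranted permissions are refused."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append((role, permission, allowed))
    return allowed

can_deploy = is_allowed("ml_engineer", "model:deploy")
scientist_deploy = is_allowed("data_scientist", "model:deploy")
unknown_role = is_allowed("contractor", "dataset:read")
print(can_deploy, scientist_deploy, unknown_role)
```

The audit trail is what makes regular reviews practical as the number of teams, datasets, and models grows.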

Conclusion and Final Thoughts

Scaling AI infrastructure is challenging, but let’s be honest—if you’re serious about AI, it’s absolutely non-negotiable. Turning your AI projects from small experiments into impactful, production-ready systems is what makes all the difference in the real world. The truth is, if you don’t scale, you’ll get left behind, especially with how fast things are moving in this space.

The challenges are plenty—computational power, data handling, managing operations, and keeping everything secure. But if you’re ready to take it on, the tools are there. Using HPC, MLOps, cloud computing, containerization, and edge computing can make scaling not only feasible but actually rewarding. Just remember, those who adapt and scale their AI systems today are the ones who will lead tomorrow.

With careful planning and the right tools, scaling your AI infrastructure can be a manageable, even rewarding process. It’s about building an AI system that grows with your ambitions and meets the demands of a rapidly evolving industry.

If you’re looking for a solution that makes building and scaling your AI projects less of a headache, Qubinets is here to help. With a flexible approach to DevOps and DataOps, Qubinets integrates seamlessly with leading cloud providers and data centres, making it easier to deploy and scale resources on your preferred cloud or on-prem.

We also have natural integration with more than 25 open-source tools (AI/ML Ops, vector databases, storage, observability, etc.) to help you build your AI project.

If you’re eager to learn more about building your AI product with Qubinets, don’t hesitate to reach out.