Businesses Are Not Ready to Grasp Generative AI

2023 was the breakthrough year for generative AI: AI tools can now generate text, code, images, and videos. Within a short period, ChatGPT has emerged as a leading example of generative AI systems. The major application areas include marketing and customer service (chatbots and knowledge bases).

However, many businesses are not ready to develop custom generative AI tools, since they lack the data infrastructure and technology needed to ensure safe applications. Even in companies that have already applied AI to automate some tasks, CEOs are often unsure of its benefits and risks. To close this gap, we present a practical approach: how to employ the right technology to create a stable, safe, and dynamic chatbot for your organization.

Implementing a Generative AI Chatbot over Your Own Knowledge Base

To implement a chatbot, you need a large language model (LLM) trained on large amounts of online text and, optionally, adapted to your own knowledge base. LLMs can interpret questions asked by users and generate human-like responses. Businesses can use LLMs to build writing assistants for marketing, chatbots for customer service, document summarizers, and more.

You can also employ generative AI by adapting an existing LLM to create your own dynamic chatbot. Retrieval-Augmented Generation (RAG) retrieves facts from the company’s knowledge base, which contains the relevant business data. Augmenting the LLM with your domain-specific knowledge base lets you build a powerful RAG-based AI chatbot. Some use cases include:

- Customer Service: You can empower your service representatives to quickly answer customer questions with precise, up-to-date information.

- Company Documentation Search: Organizations hold broad knowledge spread across many sources, including technical documentation, support articles, company policies, and code repositories. Employees can query an internal search engine to retrieve this information faster and more efficiently.

- Web-based Chatbot: Many businesses already run AI chatbots on their websites to support basic customer interactions. Using RAG, companies can build a chatbot that is highly tailored to their business specifics, so that questions about product specifications, for example, are answered promptly.

To generate accurate and clear responses with your dynamic chatbot, you should augment the existing LLM with information retrieval over the knowledge base, which is described below. You will get the most business value from LLMs by adding RAG to a chatbot built on an open-source LLM (e.g. Llama 2).

RAG (Retrieval-Augmented Generation) Framework for LLMs (Large Language Models)

Generative AI and LLMs can produce diverse content, but LLM-generated text can still be misleading because of limitations in the training data. If you ask an LLM about a recent event or trend, it may not know what you’re talking about, and the responses will be mixed at best and dubious at worst. These problems arise from two key causes:

- LLM training data is usually outdated (e.g. ChatGPT’s knowledge comes from training on a large portion of the Web, but only up to a certain cutoff date; at the time of writing, GPT-4 appears to be the most recent model).

- When facts are missing, LLMs make false but plausible-sounding statements that mask the gap in their knowledge (called hallucinations).

Retrieval-Augmented Generation (RAG) is an approach that addresses both of these issues. It pairs information retrieval with a set of system prompts to ground the LLM in precise, up-to-date, and relevant information obtained from an external knowledge store. Providing the LLM with this context lets you create domain-specific applications that require a deep and evolving understanding of facts, even though the LLM’s training data remains static.

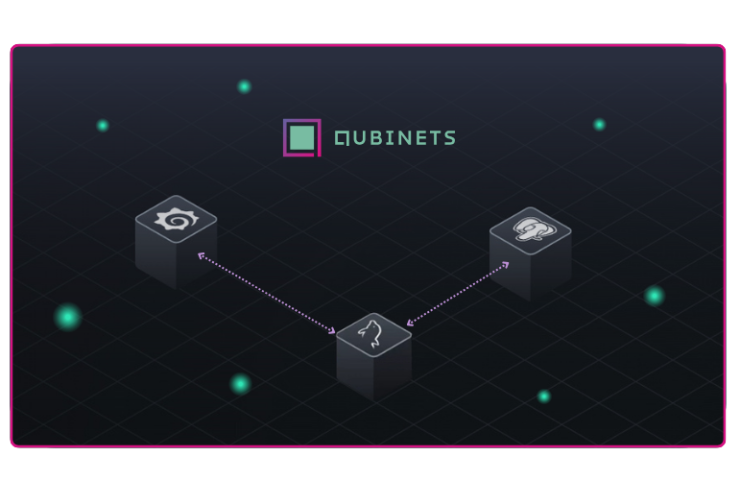

When the chatbot is combined with RAG, it can answer questions about user-specific information and recent events, and it gains up-to-date knowledge of a subject. A RAG architecture consists of three layers:

- Orchestration Layer: receives the user’s input with associated metadata (e.g. conversation history), interacts with the retrieval tools, sends the prompt to the LLM, and returns the result. The orchestration layer is typically implemented with tools like LangChain or Semantic Kernel, with some native code (often Python) tying it all together.

- Retrieval Tools: a group of tools that return the context used to ground the response to the user prompt. They include both knowledge bases and API-based retrieval systems.

- LLM: the large language model that you send prompts to. It can be hosted by a third party (e.g. OpenAI) or run internally on your own infrastructure.
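To make the division of labor between the three layers more concrete, here is a minimal sketch of an orchestration layer in plain Python. It is an illustration only and does not use LangChain or Semantic Kernel. The `Orchestrator` class, `fake_retrieve`, and `fake_llm` are hypothetical names, and the two stub functions stand in for a real retrieval tool and a real LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Orchestrator:
    """Hypothetical orchestration layer: glue between retrieval tools and the LLM."""
    retrieve: Callable[[str], List[str]]  # retrieval tool: query -> context passages
    generate: Callable[[str], str]        # LLM: prompt -> completion
    history: List[Tuple[str, str]] = field(default_factory=list)  # (user, bot) turns

    def handle(self, user_input: str) -> str:
        # 1. Ask the retrieval tools for context relevant to the user's input.
        passages = self.retrieve(user_input)
        # 2. Combine conversation history, context, and the new input into a prompt.
        past = "\n".join(f"User: {u}\nBot: {b}" for u, b in self.history)
        context = "\n".join(passages)
        prompt = (
            f"Conversation so far:\n{past}\n\n"
            f"Context:\n{context}\n\n"
            f"User: {user_input}\nBot:"
        )
        # 3. Send the prompt to the LLM and record the turn.
        reply = self.generate(prompt)
        self.history.append((user_input, reply))
        return reply


# Stand-ins (assumptions) so the sketch runs without any external service.
def fake_retrieve(query: str) -> List[str]:
    return ["Support is available Monday to Friday, 9:00-17:00."]


def fake_llm(prompt: str) -> str:
    return f"(stub answer based on a prompt of {len(prompt)} characters)"


bot = Orchestrator(retrieve=fake_retrieve, generate=fake_llm)
print(bot.handle("When is support available?"))
```

Passing the retrieval tool and the LLM in as plain callables keeps the orchestration logic independent of any particular vendor, so a hosted model or a self-hosted one could be swapped in without changing `handle`.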

How We Can Help You: Qubinets for Developing RAG Chatbots

A chatbot will be effective if it provides precise replies, recommendations, or matching results. That requires similarity search and matching over the vector representations (embeddings) that large-scale AI models generate from text.

Vector databases can provide high relevance and effectiveness for these use cases. With the Qubinets platform, vector databases can be deployed and managed on Kubernetes in just one step. Qubinets provides smooth DevOps and DataOps by connecting with popular vector databases, allowing users to deploy and use them on Kubernetes with ease. Users just need to create a workload from the building blocks, search for the database connection, and deploy it to the cluster (e.g. Amazon EKS). Creating all the Kubernetes resources required for the vector database takes only minutes. Qubinets lets you create on-demand resources, deploy production-grade Kubernetes, and streamline your cloud (Kubernetes) setup with top-tier open-source tools.

To implement a chatbot, users should first connect their data sources (internal databases, CRMs, APIs), which will serve as the foundation for the chatbot. Qubinets enables data integration via secure access to multiple sources using stored blocks. Next, the connected data should be indexed in a high-performance vector database such as Weaviate or Qdrant. To do so, vector embeddings are created to represent the data in a semantic vector space. At query time, the user’s input is also embedded into a vector. Retrieval then uses cosine similarity to identify the most relevant matching data vectors.
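The embed-then-compare step can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: `embed` uses simple word counts as a stand-in for a real embedding model, and the in-memory `DOCS` list stands in for a vector database such as Weaviate or Qdrant. None of the names below come from those products.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Dict[str, float]:
    """Toy embedding (assumption): word counts instead of a learned vector."""
    return dict(Counter(re.findall(r"\w+", text.lower())))


def cosine_similarity(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine of the angle between two sparse vectors; 0.0 if either is empty."""
    dot = sum(value * b.get(key, 0.0) for key, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: str, documents: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Embed the query, score every document, and return the k best matches."""
    query_vec = embed(query)
    scored = [(cosine_similarity(query_vec, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Hypothetical knowledge-base entries, for illustration only.
DOCS = [
    "the return policy allows refunds within 30 days",
    "our office is open monday to friday",
    "shipping takes three to five business days",
]

for score, doc in top_k("what is the return policy", DOCS, k=2):
    print(f"{score:.2f}  {doc}")
```

In a production setup the document vectors would be computed once at indexing time and stored in the vector database, which also replaces the linear scan in `top_k` with an approximate nearest-neighbor index.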

Next, the retrieved data should be incorporated into a prompt for the large language model. The LLM uses this context to generate the final response; in other words, the retrieved data augments the generation of chatbot answers. With the right RAG infrastructure in place, the chatbot will generate accurate, custom responses grounded in your company’s knowledge base.

Qubinets works as a no-code, one-stop platform (a set of tools) that enhances and simplifies DevOps efforts in data infrastructure management. At Qubinets, we manage the complexities of data infrastructure so your teams can prioritize creating impactful applications with confidence and clarity.
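One common way to incorporate retrieved data into a prompt is a simple template, sketched below. The function name `build_prompt` and the exact wording of the instructions are assumptions made for illustration. A real deployment would tune the wording for the chosen LLM.

```python
from typing import List


def build_prompt(question: str, passages: List[str]) -> str:
    """Assemble a grounded prompt from the user's question and retrieved passages."""
    # Number the passages so the model (and a human reviewer) can refer to them.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say that you do not know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical retrieved passages, for illustration only.
prompt = build_prompt(
    "How long do refunds take?",
    ["Refunds are processed within 5 business days.",
     "Returns are accepted within 30 days of purchase."],
)
print(prompt)
```

Telling the model to rely only on the supplied context, and to admit when the answer is missing, is a simple way to reduce the risk of hallucinated answers, although it does not eliminate it.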

Feel free to contact us for a quick demo on how to implement your hassle-free data infrastructure.