Greetings fellow tech enthusiasts!

It’s wonderful to have you join us again as we continue our journey into the world of containers. In our last blog post, we laid the foundation for our understanding of containers and what they are all about.

Today, we’re going to take a closer look at some of the key mechanisms that make containers so powerful. From container images and engines to runtimes and use cases, we’ll delve deeper into the nitty-gritty details that make containers work.

So, grab a cup of coffee, and let’s dive in!

Container image

Previously, we established that a container is a self-contained environment where an app runs with its own source code, dependencies, and a virtualized view of OS resources, all isolated from other containers and the host OS. The kernel itself is not duplicated: every container shares the kernel of the host.

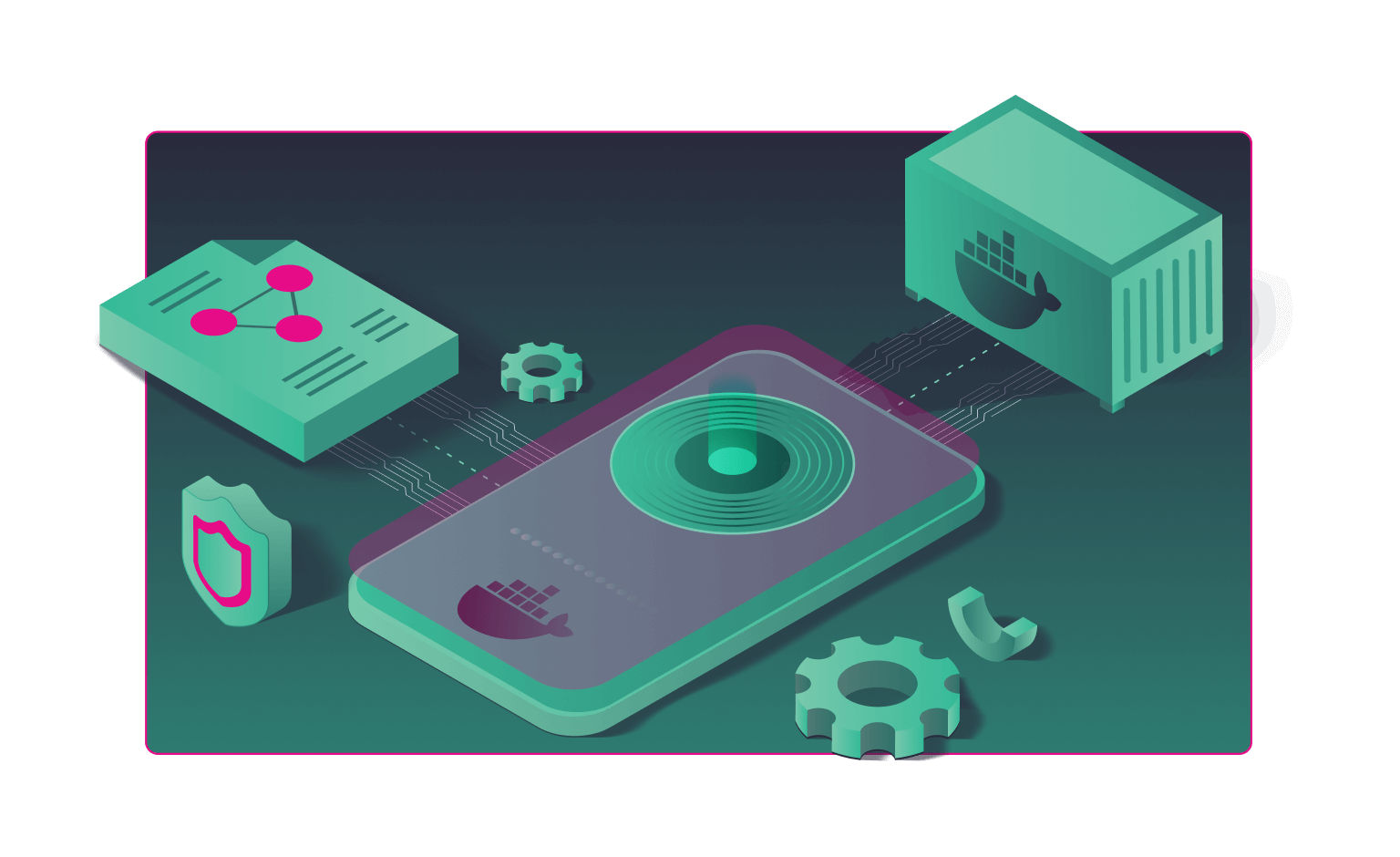

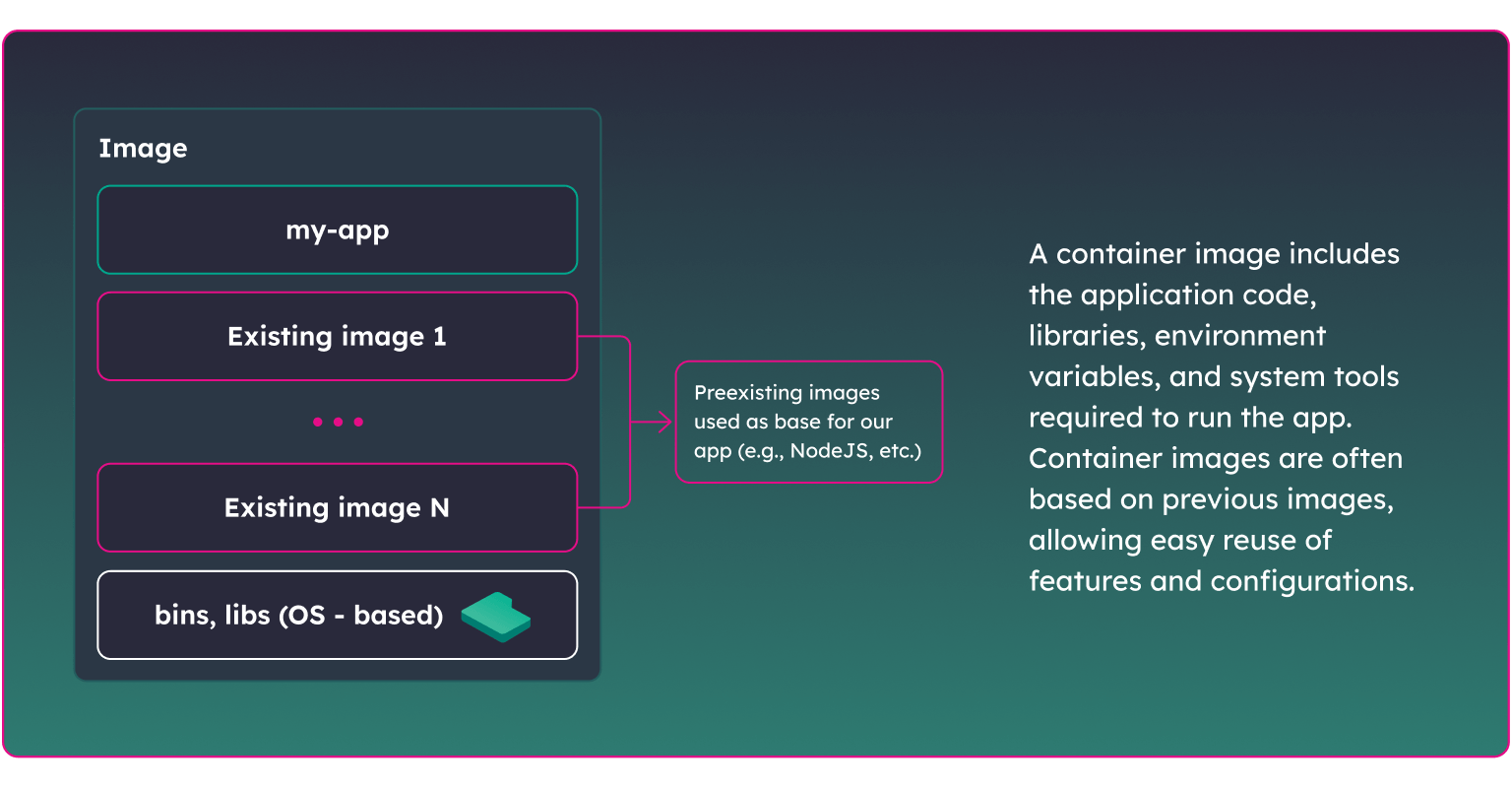

But how exactly do we create a container? Just like with a classic virtual machine, the first step is to create a container image – a blueprint for your container, containing all the necessary information and settings for your application to run smoothly.

A container image is a lightweight, stand-alone, and executable package of software that includes everything needed to run a piece of software, including the code, a runtime, libraries, environment variables, and system tools. It is created based on a set of instructions, known as a Dockerfile, that specifies the application’s environment and dependencies. Once the Dockerfile is ready, you can use it to build your image, which can then be run as a container on any host with Docker or other container engines installed.

As an illustrative example, consider creating a container image for a Node.js server app in a Linux-based environment. The image would comprise your app source code, the Node.js runtime, libraries, dependencies, environment variables, and operating system tools (shell, system libraries, and utilities).
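To make that list of ingredients more tangible, here is a small Python sketch that renders the text of a Dockerfile for such an app. Treat it as an illustration only: the `ImageSpec` class, the `node:20-alpine` base image tag, the `server.js` entry point, and the `npm ci` install step are all assumptions made for this example, not details from a real project.

```python
from dataclasses import dataclass, field


@dataclass
class ImageSpec:
    """Hypothetical description of what should go into the image."""
    base_image: str                       # supplies the runtime and OS tools
    workdir: str = "/app"                 # where the app source code will live
    env: dict = field(default_factory=dict)
    install_cmd: str = "npm ci"           # assumed dependency install step
    start_cmd: tuple = ("node", "server.js")


def render_dockerfile(spec: ImageSpec) -> str:
    """Turn the spec into Dockerfile text, one instruction per line."""
    lines = [f"FROM {spec.base_image}", f"WORKDIR {spec.workdir}"]
    lines += [f"ENV {key}={value}" for key, value in spec.env.items()]
    # Copy the dependency manifests first so the install step can be cached.
    lines.append("COPY package*.json ./")
    lines.append(f"RUN {spec.install_cmd}")
    # Then copy the rest of the application source code.
    lines.append("COPY . .")
    cmd = ", ".join(f'"{part}"' for part in spec.start_cmd)
    lines.append(f"CMD [{cmd}]")
    return "\n".join(lines) + "\n"


spec = ImageSpec(base_image="node:20-alpine", env={"NODE_ENV": "production"})
dockerfile_text = render_dockerfile(spec)
print(dockerfile_text)
```

Each ingredient maps to an instruction: `FROM` brings in the runtime and system tools, `ENV` sets environment variables, `RUN` installs dependencies, and `COPY` adds your source code.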

However, you don’t have to start from scratch when creating your image. One of the key benefits of containers is that images can be based on previous images, allowing you to layer and inherit features and configurations and keep images as compact as possible. For example, you can build your image on an existing Node.js image that is itself built on Alpine Linux, a lightweight distribution that is a popular base for container images because it ships only a minimal set of libraries and utilities, which suits small-footprint environments.
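If layering sounds abstract, the following toy model may help. It is a simplified sketch, not how any real engine stores images: the `Image` class, the file paths, and the file contents are all made up for illustration. Each image contributes one layer of files and points at its parent, and the final file system is the merged view in which upper layers shadow lower ones.

```python
from collections import ChainMap
from dataclasses import dataclass
from typing import Optional


@dataclass
class Image:
    """Toy image: one layer of files plus an optional parent image."""
    name: str
    layer: dict                           # path -> content added by this image
    parent: Optional["Image"] = None

    def layers(self) -> list:
        """Return layers ordered from the top (this image) down to the base."""
        inherited = self.parent.layers() if self.parent else []
        return [self.layer] + inherited

    def filesystem(self) -> ChainMap:
        """Merged view of all layers; the first (top) layer wins on conflicts."""
        return ChainMap(*self.layers())


# Hypothetical three-level chain: base OS -> runtime -> application.
alpine = Image("alpine", {"/bin/sh": "busybox shell", "/etc/motd": "Welcome to Alpine"})
node = Image("node-alpine", {"/usr/local/bin/node": "node runtime"}, parent=alpine)
app = Image(
    "my-app",
    {"/app/server.js": "app source", "/etc/motd": "Welcome to my-app"},
    parent=node,
)

fs = app.filesystem()
print(len(app.layers()))      # number of layers in the chain
print(fs["/bin/sh"])          # inherited from the base image
print(fs["/etc/motd"])        # overridden by the top layer
```

The app image only stores its own two files; everything else is inherited, which is why derived images can stay small.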

To summarize, the main goal of creating container images is to provide a consistent and reproducible way to package and deploy software, reducing complexity and risk in the deployment process. Container images are designed to be portable and easily distributable, making it possible to run the same software in different environments. They’re usually stored in private or public registries such as Docker Hub or Google Container Registry, making them readily available for distribution and deployment.
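Registries are also why image names look the way they do. As a rough sketch, the Python function below splits an image reference into registry, repository, and tag, filling in the defaults Docker applies when parts are omitted (`docker.io`, the `library/` namespace, and the `latest` tag). It is deliberately simplified and does not handle digests (`@sha256:...`); the function name and the example references such as `gcr.io/my-project/api` are hypothetical.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split 'registry/repository:tag' and fill in Docker-style defaults."""
    first, slash, rest = ref.partition("/")
    # The first component counts as a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref
    # A tag is whatever follows a colon in the last path component.
    repository, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        repository, tag = remainder, "latest"
    # Official Docker Hub images live under the 'library/' namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = f"library/{repository}"
    return ImageRef(registry, repository, tag)


for example in ("node:20-alpine", "gcr.io/my-project/api", "localhost:5000/team/app:1.2"):
    print(example, "->", parse_image_ref(example))
```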

Containers in runtime

As you can see, a container image is a static package of files, but to make it do useful work, we need to run it, i.e., create a runtime environment. To do that, first we need to install a container engine on our system.

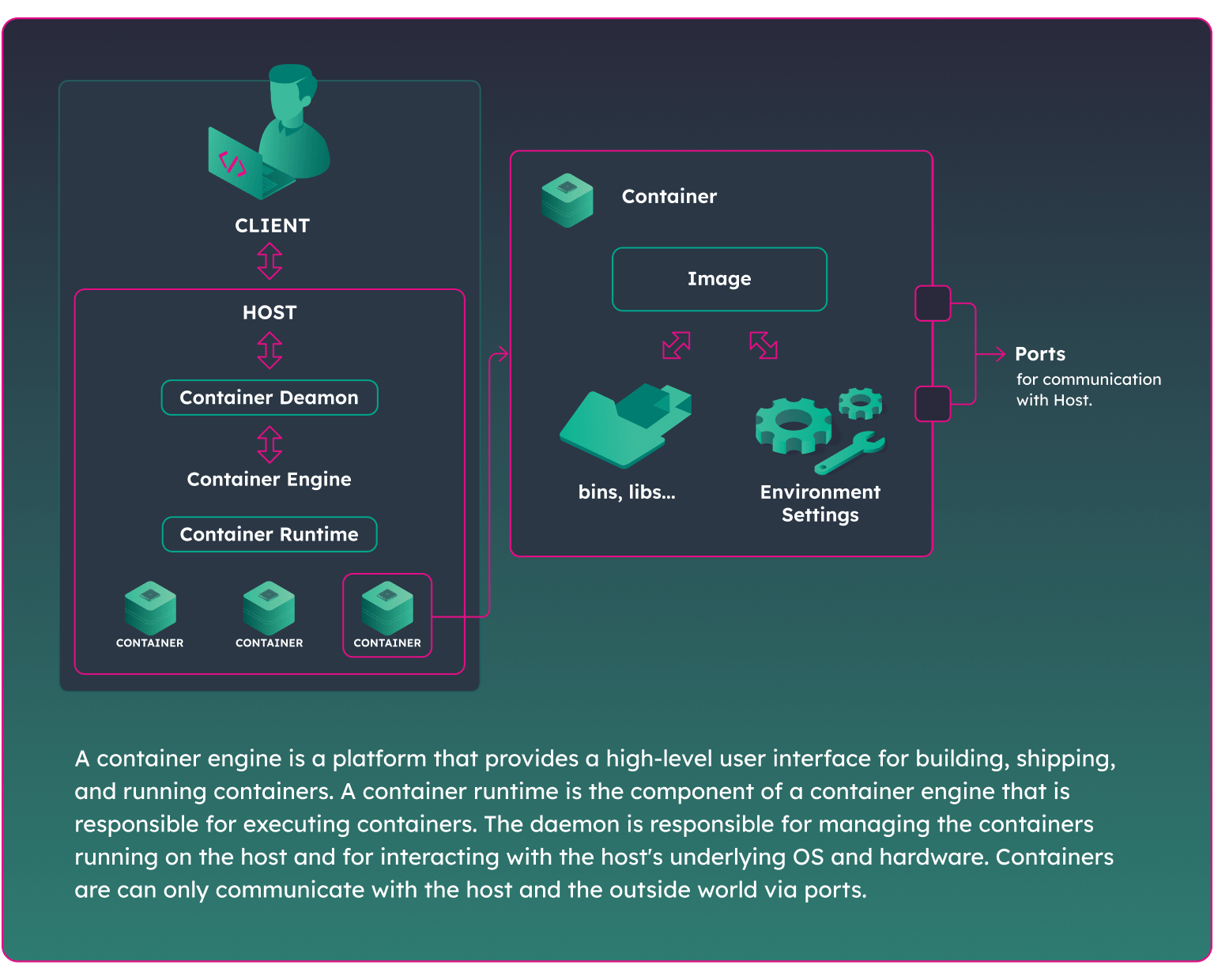

A container engine is a higher-level component (a platform) that provides a convenient interface for users to manage containers. It accepts requests through a CLI, an API, or sometimes a graphical user interface, and abstracts away the underlying complexities of the container runtime, providing an easy-to-use interface for deploying and managing containers. Examples of container engines include Docker Engine and Podman. Managed services such as Google Kubernetes Engine and Amazon Elastic Container Service (ECS) sit one level higher: they orchestrate containers across many hosts rather than run them on a single one.

A container runtime, on the other hand, is the low-level component responsible for executing containers. It provides the necessary functionality for creating and managing containers, such as starting and stopping them, handling the container lifecycle, providing resource isolation and management, and connecting containers to networks and storage. Examples of container runtimes include containerd, CRI-O, and rkt (rocket, now discontinued).

When you launch a container from an image, it enters a running state and starts to perform the tasks it was designed to do. Each running container is an isolated environment that includes the following components: application code and dependencies, virtualized file system, environment variables, and configuration settings.
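The idea that a container moves between states can be sketched as a tiny state machine. The state names below are loosely borrowed from the OCI runtime specification (`created`, `running`, `stopped`), but the `Container` class, the operation names, and the extra `deleted` marker are simplifications invented for this post; real engines track more states and more detail.

```python
from enum import Enum


class State(Enum):
    CREATED = "created"
    RUNNING = "running"
    STOPPED = "stopped"
    DELETED = "deleted"   # not an OCI state; marks a removed container here


# Allowed operations per state: operation name -> next state.
TRANSITIONS = {
    State.CREATED: {"start": State.RUNNING},
    State.RUNNING: {"stop": State.STOPPED},
    State.STOPPED: {"delete": State.DELETED},
    State.DELETED: {},
}


class Container:
    """Toy container: an image reference, its settings, and a lifecycle state."""

    def __init__(self, image, env=None):
        self.image = image
        self.env = dict(env or {})        # environment variables for the app
        self.state = State.CREATED
        self.history = [self.state]

    def apply(self, operation):
        """Apply an operation, or raise if it is invalid in the current state."""
        allowed = TRANSITIONS[self.state]
        if operation not in allowed:
            raise ValueError(f"cannot {operation} a {self.state.value} container")
        self.state = allowed[operation]
        self.history.append(self.state)
        return self.state


container = Container("my-app:1.0", env={"PORT": "8080"})
container.apply("start")
container.apply("stop")
print([state.value for state in container.history])
```

Note that the image is never modified along the way: you can launch any number of containers from the same image, each with its own state and settings.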

Another term often mentioned along with container runtime is a container daemon. It is a background process that runs on the host system and acts as a bridge between the host operating system and the containers. It communicates with the container runtime to perform various tasks related to container creation, management, and destruction. To put it simply, the container daemon is responsible for interfacing with the host operating system and the user, while the container runtime is responsible for interfacing with the containers themselves.

So, what exactly is Docker?

If you have explored the world of containers, you might have noticed that the term “Docker” is often used to mean a container image, a container engine, and a container runtime alike. So, let’s clarify things a bit.

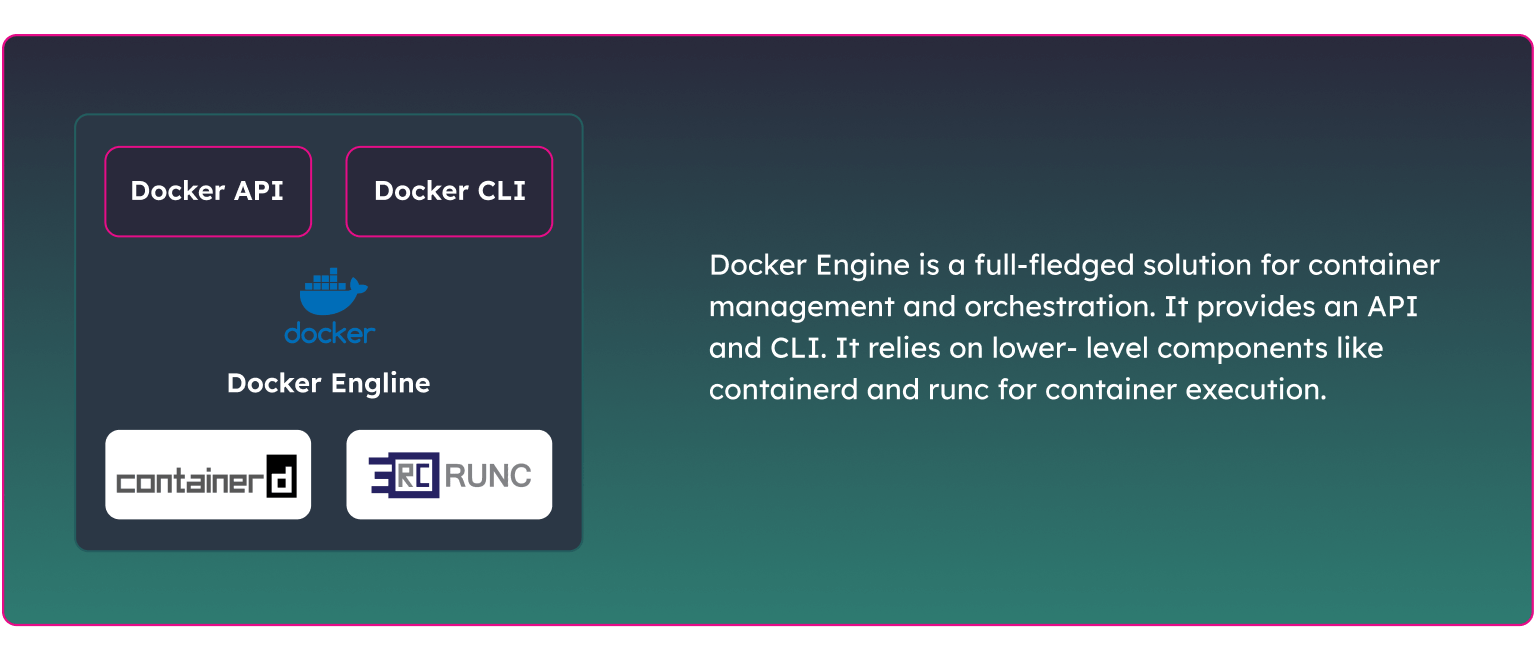

Docker is a complete platform for building, packaging, and deploying applications in containers. It bundles a container engine (Docker Engine), a container image format (Docker Image), and a container registry (Docker Hub). Docker also provides a rich set of tools for managing containers, including a CLI and a graphical user interface.

However, you don’t need a full Docker Engine to run a Docker image. Instead, you can use any runtime that understands the same image format. A popular example is containerd, a container runtime that is designed to be more lightweight than the full Docker platform. It provides the core functionality for launching and managing containers, but without the additional features and overhead of a full platform like Docker. containerd is designed to be embedded in other systems, such as Kubernetes, to provide container management capabilities.

In fact, Docker Engine itself has been built on containerd since version 1.11, which allows for better integration with other tools and systems and reduces the complexity and size of the Docker engine. To go even deeper, containerd is itself a high-level container runtime: it uses yet another lower-level runtime called runc to start a new process and set up the container’s environment.
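One way to picture these layers is as a chain of components that each hand the request to the next one down. The Python sketch below models only that delegation; the class names and the `handle` method are invented for illustration, the real components talk over APIs and sockets, and intermediate pieces are left out. The `kubelet` entry (the Kubernetes node agent) is our own addition to show containerd being reused without Docker.

```python
class Runc:
    """Stands in for the low-level runtime that actually starts the process."""
    name = "runc"

    def handle(self, request, trace):
        trace.append(self.name)
        return f"started {request['image']}"


class Layer:
    """A component that records the call, then delegates to the one below it."""

    def __init__(self, name, downstream):
        self.name = name
        self.downstream = downstream

    def handle(self, request, trace):
        trace.append(self.name)
        return self.downstream.handle(request, trace)


def build_docker_stack():
    # CLI -> daemon (engine) -> high-level runtime -> low-level runtime
    return Layer("docker CLI", Layer("dockerd", Layer("containerd", Runc())))


def build_kubernetes_stack():
    # Same lower layers, but no Docker Engine on top.
    return Layer("kubelet", Layer("containerd", Runc()))


docker_trace, k8s_trace = [], []
result = build_docker_stack().handle({"image": "my-app:1.0"}, docker_trace)
build_kubernetes_stack().handle({"image": "my-app:1.0"}, k8s_trace)
print(" -> ".join(docker_trace))
print(" -> ".join(k8s_trace))
print(result)
```

Both chains end in the same two components, which is the point: the image and the low-level machinery stay the same, and only the layer you talk to changes.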

Use cases

So, should you switch your classic deployment to a containerized environment? Although containers solve many problems, they are not meant for every type of application. Here are some typical use cases where you should opt for containers:

1. Microservices Architecture – containers make it easier to break down monolithic applications into smaller, more manageable services.

2. Cloud-Native Applications – containers provide a standard for packaging and deploying applications in the cloud.

3. Continuous Integration and Deployment (CI/CD) – containers provide a reproducible and consistent environment for building, testing, and deploying software.

In such cases, containers provide many benefits regarding application portability, scalability, resource optimization, and disaster recovery.

However, containers may not be the best choice in the following situations:

· Legacy applications that have been built and designed to run on specific hardware configurations may not be suitable for containerization.

· Resource-intensive applications that need large amounts of memory, CPU, or storage may not run efficiently within a container and may be better suited for a traditional setup.

· Applications that require direct access to hardware components, such as a GPU, need extra host configuration to reach those devices from within a container and may be simpler to run directly on the host.

· Applications that handle sensitive information or must follow strict security protocols may not be suitable for containerization due to the potential security risks associated with sharing the host operating system kernel.

Another thing to bear in mind is that containers can introduce additional complexity to an application, especially for developers who are new to the technology. Managing and maintaining containers can be time-consuming and requires specialized skills, which can increase the cost of operation.

Next time …

So, that’s it for today! We appreciate your interest in our blog, and we hope it has helped accelerate your journey into the world of containers. Be sure to check out our next blog post where we’ll dive deeper into container orchestration and explore the benefits of Kubernetes. We look forward to sharing more insights with you soon!