Enormous data growth, tough economic conditions, and the demand for lower maintenance costs are putting significant pressure on companies. The main goal of data infrastructure management is to improve service levels while providing flexible, scalable, and low-risk services. IT departments are urged to address the following data-related challenges:

- Improving service availability and reducing the impact of unplanned outages/failures.

- Achieving business agility by making data and resources available in real time.

- Reducing deployment times for new services (or updates), thus saving operational costs and achieving competitive advantage in the market.

- Reducing capital expenses with better management and adoption of new abstraction layers in the architecture.

- Maintaining information assurance through consistent and robust security processes.

- Reducing power consumption (operational costs) and aligning with the “green” agenda.

Data Infrastructure Requirements

To increase IT resource utilization and maintain high performance, IT managers lean towards a consolidated, virtualized data infrastructure with the following characteristics:

- Virtualization: running multiple virtual machines provides more computing capacity along with increased reliability and scalability.

- Efficiency: achieved through appropriate management tools and a homogeneous infrastructure.

- Flexibility: using "meta models" (frameworks, rules, and constraints that define how infrastructure is built). The platform's functional components should be independent rather than interdependent.

- Modularity: building blocks can scale up and down to meet heterogeneous workload requirements and share common integration points (web-based APIs).

- Security: taking appropriate measures (systems, processes, protocols, and tools) based on a risk assessment derived from the threat model.

Data infrastructure brings data from the system that generates it to the user who needs it, while performing transformations and cleansing along the way. Data architecture building blocks include:

- Data collection: data is generated on remote devices and applications and accessed via APIs. Apache Kafka can be used to capture the data and transfer it to the next destination; configured with idempotent producers and transactions, it can deliver records without dropping or duplicating them.

- Ingestion: collected data is moved to a storage layer (a database or AWS S3), where it is prepared for analysis. At this point, data can be cataloged and profiled to produce statistics such as cardinality and missing values.

- Preparation: data is aggregated, cleaned, and transformed to align it with company standards and make it available for further analysis. This step includes file format conversion, data compression, and partitioning.

- Consumption: prepared data is delivered to the production environment, such as analytics and visualization tools, operational data stores, decision engines, or user-facing applications.
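The four stages above can be illustrated with a minimal, in-memory sketch. It assumes records are plain Python dicts with hypothetical fields `id`, `ts` (Unix seconds), `device`, and `value`. Deduplication by `id` stands in for the delivery guarantees a broker such as Kafka provides, a Python list stands in for the storage layer, and the `date=YYYY-MM-DD` keys mimic a Hive-style partition layout. This is not Kafka or S3 client code.

```python
from collections import defaultdict
from datetime import datetime, timezone


def collect(raw_events):
    """Collection: drop duplicate deliveries, keyed by the (hypothetical) record id."""
    seen = set()
    out = []
    for event in raw_events:
        if event["id"] not in seen:
            seen.add(event["id"])
            out.append(event)
    return out


def profile(records, columns):
    """Ingestion: per-column cardinality and missing-value counts."""
    stats = {}
    for col in columns:
        values = [r.get(col) for r in records]
        present = [v for v in values if v is not None]
        stats[col] = {"cardinality": len(set(present)),
                      "missing": len(values) - len(present)}
    return stats


def prepare(records):
    """Preparation: clean rows, normalize types, and partition by event date (UTC)."""
    partitions = defaultdict(list)
    for r in records:
        if r.get("value") is None:  # cleaning: skip rows without a measurement
            continue
        day = datetime.fromtimestamp(r["ts"], tz=timezone.utc).date().isoformat()
        partitions[f"date={day}"].append({"device": r["device"], "value": float(r["value"])})
    return dict(partitions)


def consume(partitions):
    """Consumption: an aggregate a dashboard might show (mean value per partition)."""
    return {key: sum(r["value"] for r in rows) / len(rows)
            for key, rows in partitions.items()}


events = [
    {"id": 1, "ts": 0, "device": "a", "value": 2},
    {"id": 1, "ts": 0, "device": "a", "value": 2},  # duplicate delivery
    {"id": 2, "ts": 0, "device": "b", "value": None},
    {"id": 3, "ts": 86400, "device": "a", "value": 4},
]
records = collect(events)
print(profile(records, ["device", "value"]))
print(consume(prepare(records)))
```

In a real deployment each function would be a separate service or job; keeping them as pure functions here makes the hand-off between stages easy to see.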

Architecture Building Blocks

Today, architecture building blocks are used in application development, application middleware, management tools, networking, computing, and storage. There are three kinds: hard, soft, and connector building blocks. Hard building blocks combine software and hardware and are further divided into system-tier and application-tier building blocks. Soft building blocks are software entities such as services. Connector building blocks are the glue that connects all the components.

Most IT environments can be standardized using some or all of these architectural building blocks. For example, a database server can be treated as a resource-tier building block; other tiers include the integration, business, presentation, and client tiers. Without such standardization, the servers in each tier are designed and configured differently, managed by different groups of people, and maintained and tuned separately, which creates a significant management challenge and raises the cost of deploying and maintaining these tiers.

To manage complex applications, many companies containerize their building blocks and orchestrate them with Kubernetes. Kubernetes offers many advantages for deploying and managing containerized applications: scalability, easy application packaging, high availability, self-healing, resource optimization, service discovery, and more. With its large ecosystem and community support, Kubernetes is widely used for container orchestration and has been adopted for modern application deployments. However, it is difficult to integrate with the other tools needed to run applications.
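Kubernetes' self-healing follows a declarative control-loop pattern: a controller repeatedly compares the desired state (for example, a replica count) with the observed state and acts on the difference. The sketch below models that idea in plain Python against a hypothetical in-memory list of pods; it does not use the Kubernetes API, and names such as `reconcile` and `replica-N` are illustrative assumptions.

```python
import itertools

_replica_ids = itertools.count()  # source of hypothetical pod names


def reconcile(desired_replicas, running_pods):
    """One pass of a simplified control loop.

    Converges the observed pods towards `desired_replicas` and returns
    (new_pod_list, actions_taken). Pods are dicts with "name" and "healthy".
    """
    actions = [f"delete {p['name']}" for p in running_pods if not p["healthy"]]
    pods = [p for p in running_pods if p["healthy"]]  # failed pods are discarded

    while len(pods) < desired_replicas:  # replace failed pods / scale up
        pod = {"name": f"replica-{next(_replica_ids)}", "healthy": True}
        pods.append(pod)
        actions.append(f"create {pod['name']}")

    while len(pods) > desired_replicas:  # scale down surplus pods
        pod = pods.pop()
        actions.append(f"delete {pod['name']}")

    return pods, actions


cluster = [{"name": "web-1", "healthy": True}, {"name": "web-2", "healthy": False}]
cluster, actions = reconcile(3, cluster)
print(actions)
```

Running the same pass again on an already converged state produces no actions, which is what makes it safe for a controller to repeat the loop indefinitely.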

Although Kubernetes is a powerful tool for container orchestration, it does not ship with many components needed to run applications in production, such as observability and metrics stacks, CI/CD pipelines, security and compliance tooling, a service mesh, and storage backends. To provide fast, controlled, and secure deployment and management of applications, IT companies need to adopt a self-service abstraction layer for developers. Companies that already run a cloud or open-source environment are now trying to embrace and effectively implement Infrastructure as Code (IaC) principles for automated provisioning and configuration management. Kubernetes is used to deploy applications and to expose or consume web services, while self-service tools help developers bridge its gaps and run and manage apps securely.
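IaC tools typically compare a declared configuration with the resources that currently exist and compute a plan of create, update, and delete actions before applying anything. The following sketch shows that diff step in plain Python; the resource names and attributes are hypothetical, and the output is not the plan format of Terraform or any other specific tool.

```python
def plan(desired, current):
    """Return ordered (action, name) pairs that would turn `current` into `desired`.

    Both arguments map resource names to attribute dicts.
    """
    steps = []
    for name in sorted(desired):
        if name not in current:
            steps.append(("create", name))
        elif desired[name] != current[name]:
            steps.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            steps.append(("delete", name))
    return steps


# Hypothetical declared state vs. what is currently provisioned
desired = {
    "kafka": {"replicas": 3, "version": "3.7"},
    "grafana": {"replicas": 1},
}
current = {
    "kafka": {"replicas": 1, "version": "3.7"},
    "old-dashboard": {"replicas": 1},
}

for action, name in plan(desired, current):
    print(action, name)
```

Because the plan is derived only from the two states, applying the same configuration twice yields an empty plan the second time, which is the idempotence IaC relies on.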

Development teams and CTOs agree that Kubernetes is a great tool, yet a very complex one. In addition, they need at least a dozen other tools to automate the application lifecycle and to monitor, manage, and secure workloads. Choosing between building a developer platform for Kubernetes or the cloud from scratch and adopting a ready-to-run solution can be a tough decision. In this article, we present a way to simplify DevOps efforts and foster data infrastructure automation.

A Way to Simplify DevOps Efforts

Qubinets is a collaborative platform for building and managing data infrastructure composed of diverse open-source technologies across multiple cloud providers. It works as a no-code, one-stop platform (a set of tools) that simplifies DevOps work around data infrastructure management.

Qubinets' set of tools can speed up building big data and AI applications by up to 50%:

- Users can combine 40 different open-source tools into a working architecture.

- The tools provide an AI-assisted, visual user experience for building and operating data infrastructure.

- AI-assisted design automates dimensioning, configuration, and deployment.

- Autonomous backend support observes and resolves issues in real time.

- A drag-and-drop UX lets users create, visualize, and monitor complex big data and AI infrastructure.

Qubinets also offers a user-friendly UI for building complex data infrastructure with drag and drop. It also makes it easy to connect multiple building blocks so that they work in unison immediately after deployment.

How We Can Help: Qubinets vs. DevOps

Qubinets builds an ecosystem that increases the adoption of open-source technologies by providing user-friendly tools and combining them into working data architectures. Useful Qubinets features include:

Development Environment: our proprietary solution-architecture canvas view makes building more efficient and engaging. It lets you focus on what matters: building effective, stable, and cost-optimized solutions for yourself or your clients.

Effortless Provisioning with a Click: data infrastructure operations are reduced to a single action. Qubinets lets you roll out on-demand resources and fire up production-grade Kubernetes faster than you can finish your status update.

Automated Configuration and Deployment (CI/CD): Qubinets streamlines your cloud and Kubernetes setup with top-tier open-source tools, cutting through complexity and improving project reliability with minimal effort.

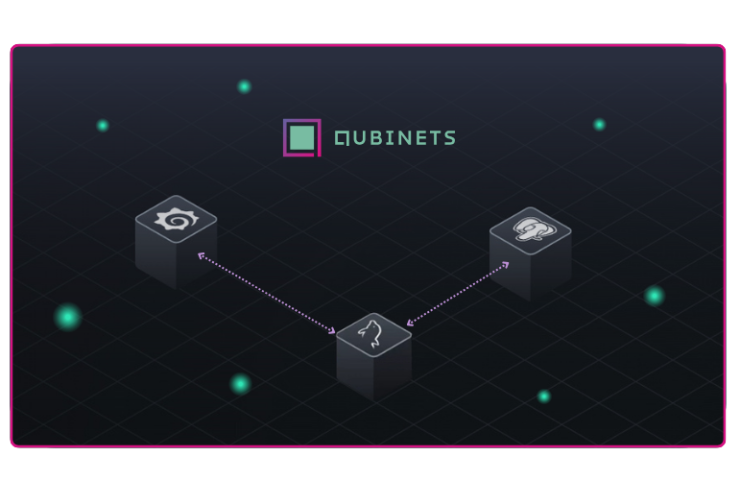

Observability and Log Analysis: Qubinets is a log-streaming solution, so we chose Apache Kafka as our first Qub. Prometheus serves as the open-source monitoring tool. The Log Generator Qub acts as the data source, and the Apache Druid, ClickHouse, and OpenSearch Qubs provide data store implementations.
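Prometheus collects metrics by scraping HTTP endpoints that serve a plain-text exposition format. To illustrate what such an endpoint returns, the sketch below renders a counter family in that format using only the standard library. The metric name `qub_log_lines_total` and its `source`/`sink` labels are hypothetical; in practice the official `prometheus_client` library would generate this text for you.

```python
def escape_help(text):
    # HELP text escapes backslash and line feed
    return text.replace("\\", "\\\\").replace("\n", "\\n")


def escape_label(value):
    # Label values additionally escape the double quote
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_counter(name, help_text, samples):
    """Render one counter family.

    `samples` maps a tuple of (label_name, label_value) pairs to a numeric value.
    """
    lines = [f"# HELP {name} {escape_help(help_text)}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        if labels:
            label_str = ",".join(f'{k}="{escape_label(v)}"' for k, v in labels)
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical counts of log lines delivered from the generator to two stores
samples = {
    (("source", "log-generator"), ("sink", "clickhouse")): 42,
    (("source", "log-generator"), ("sink", "opensearch")): 40,
}
print(render_counter("qub_log_lines_total", "Log lines delivered per sink.", samples), end="")
```

The `_total` suffix follows the Prometheus naming convention for counters; Grafana can then query the scraped series with PromQL, for example `rate(qub_log_lines_total[5m])`.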

Metrics and Dashboards: the Qubinets platform uses Grafana as the observability tool for your apps and data infrastructure. It lets anyone, even someone who has never written a line of code, build and deploy Grafana in a matter of minutes. A few drag-and-drop actions and your Grafana instance is up and running.

At Qubinets, we manage the complexities of data infrastructure so your teams can focus on creating impactful applications with confidence and clarity. Feel free to contact us for a quick demo of how to implement your hassle-free data infrastructure.