AI agents are everywhere. Everyone is talking about them, and we’re just at the beginning of that “boom”.

They already handle a wide range of tasks, from answering customer support tickets and processing financial transactions to assisting doctors with diagnoses.

But while we’ve been busy making them faster and more intelligent, we haven’t talked enough about something just as important—how these AI agents should actually be managed and structured.

A recent research paper, Infrastructure for AI Agents, tackles this exact issue. It makes a strong case that AI agents need clear governance, better control mechanisms, and solid operational strategies to function properly. Otherwise, we’re looking at a mess: inefficiencies, compliance nightmares, and decisions being made by AI systems without the proper checks and balances.

This isn’t just theory. AI agents are already influencing finance, healthcare, and legal systems in ways that affect real people. So, what does this paper tell us about the current state of AI agent deployment? And what should businesses and developers be thinking about before rolling out their own AI-powered solutions?

Let’s break it down.

Understanding Agent Infrastructure

AI agents don’t operate in a vacuum—they interact with people, businesses, and other digital systems. But without a structured framework governing these interactions, we risk inefficiencies, security vulnerabilities, and unintended consequences. That’s where agent infrastructure comes into play.

What Is Agent Infrastructure?

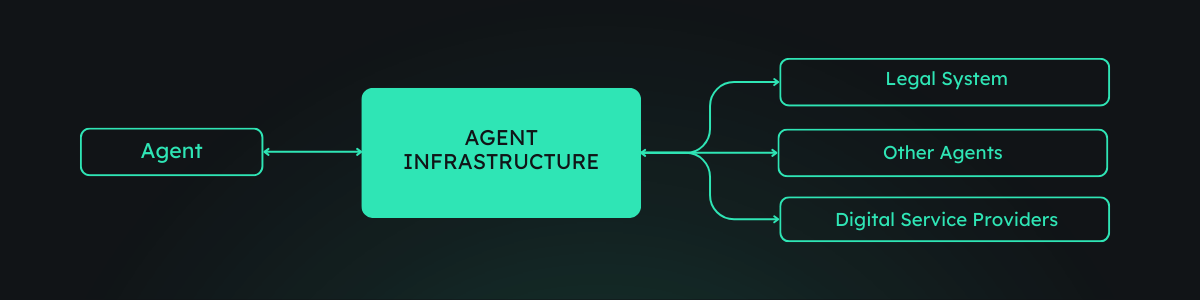

Agent infrastructure refers to the external systems and protocols that manage AI agents’ behavior, ensuring they operate in a controlled, accountable, and predictable manner. Unlike AI models, which focus on intelligence and task execution, agent infrastructure is about the rules, governance, and operational layers that dictate how AI agents engage with the world.

According to the paper, this infrastructure provides the foundation for attribution, interaction, and response mechanisms—essential elements that define how AI agents are identified, how they communicate, and how their actions can be monitored and corrected.

Think of it like an air traffic control system for AI. Without it, every AI agent would be flying blindly, leading to collisions, miscommunications, and systemic failures.

Why Does It Matter?

The growing deployment of AI agents in industries like finance, healthcare, and logistics makes infrastructure non-negotiable for several reasons:

- Accountability – If an AI agent executes a financial transaction or a medical recommendation, who is responsible? Infrastructure ensures AI actions can be traced back to a responsible party, whether a human operator or an organization.

- Safety & Compliance – AI agents operate in regulated environments. Infrastructure acts as the compliance layer, enforcing security policies, legal constraints, and industry standards.

- Interoperability – AI agents are increasingly integrated into broader ecosystems, from APIs to enterprise systems. Infrastructure establishes communication protocols that allow AI agents to function seamlessly within existing institutions.

Without robust agent infrastructure, AI deployments will remain fragmented, unpredictable, and, in some cases, dangerous. The next step is understanding how this infrastructure is built—through attribution, interaction, and response mechanisms, which we’ll explore next.

Key Functions of Agent Infrastructure

Agent infrastructure is not just a passive framework; it actively shapes how AI agents operate, interact, and are held accountable. The paper breaks this down into three core functions: Attribution, Interaction, and Response. Each of these plays a crucial role in ensuring AI agents function effectively within society.

1. Attribution: Who’s Responsible for AI Actions?

Attribution refers to tying AI agents to real-world identities and responsibilities. If an AI agent executes an action—whether making a financial transaction or generating content—there needs to be a way to trace it back to an accountable entity, whether that’s a person, a company, or another AI system.

The paper highlights several key mechanisms that enable attribution:

- Identity Binding – Ensures an AI agent’s actions can be linked to a specific human or organization.

- Certification – Provides a way to verify an AI agent’s capabilities and compliance with regulations.

- Agent IDs – Unique identifiers that track agent activities and link them to accountability structures.

Without proper attribution, AI-generated actions could become a legal and ethical grey area, leading to security risks and regulatory challenges.
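To make identity binding and agent IDs a little more concrete, here is a minimal Python sketch. It is not taken from the paper: the `AgentRegistry` class, the `sign_action` helper, and the idea of signing each action with an HMAC key are all assumptions chosen for illustration, and a real deployment would rely on a shared, audited identity service rather than an in-memory dictionary.

```python
import hashlib
import hmac
import secrets
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRecord:
    """Hypothetical registry entry binding an agent ID to an accountable party."""
    agent_id: str
    owner: str                 # person or organization responsible for the agent
    certifications: frozenset  # labels a certifier has attested to (illustrative)


class AgentRegistry:
    """Illustrative in-memory registry, not a production identity service."""

    def __init__(self):
        self._records = {}
        self._keys = {}

    def register(self, owner, certifications=()):
        """Issue a unique agent ID plus a secret signing key for a new agent."""
        record = AgentRecord(str(uuid.uuid4()), owner, frozenset(certifications))
        key = secrets.token_bytes(32)
        self._records[record.agent_id] = record
        self._keys[record.agent_id] = key
        return record, key

    def attribute(self, agent_id, action, signature):
        """Return the accountable owner if the signature checks out, else None."""
        key = self._keys.get(agent_id)
        if key is None:
            return None  # unknown agent ID: nothing to attribute the action to
        expected = sign_action(key, agent_id, action)
        if not hmac.compare_digest(expected, signature):
            return None  # action text or signature was altered
        return self._records[agent_id].owner


def sign_action(key, agent_id, action):
    """Tag an action with an HMAC so it cannot be pinned on a different agent."""
    message = f"{agent_id}:{action}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()
```

In this sketch, an agent calls `sign_action` before acting, and anyone with access to the registry can later call `attribute` to find the responsible owner. A tampered action or an unknown agent ID yields `None` instead of a misattribution.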

2. Interaction: How AI Agents Engage with Systems and Each Other

AI agents aren’t isolated; they interact with users, businesses, and even other AI agents. Infrastructure plays a key role in defining these interactions to prevent misuse, inefficiencies, or unintended consequences.

The paper identifies several critical components:

- Agent Channels – Secure, structured pathways that allow AI agents to interact with digital services.

- Oversight Layers – Mechanisms that enable human or automated supervision over AI actions.

- Inter-Agent Communication – Protocols that allow AI agents to interact and collaborate while maintaining security and efficiency.

- Commitment Devices – Tools that enforce agreements between AI agents, ensuring reliability in automated transactions.

Without structured interaction mechanisms, AI agents could cause disruptions—misinterpreting commands, miscommunicating with other systems, or even engaging in harmful activities.
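As a rough illustration of how an agent channel and an oversight layer might fit together, consider the sketch below. Everything in it is hypothetical: the `OversightChannel` class, the `auto_limit` threshold, and the three status strings are assumptions made for this example, not components defined by the paper.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Action:
    agent_id: str
    name: str
    amount: float


class OversightChannel:
    """Hypothetical channel that routes agent actions through a review policy."""

    def __init__(self, execute: Callable[[Action], None],
                 reviewer: Callable[[Action], bool], auto_limit: float):
        self._execute = execute        # the downstream service call
        self._reviewer = reviewer      # human or automated supervisor
        self._auto_limit = auto_limit  # actions at or below this run unreviewed
        self.log: List[tuple] = []     # record of every decision, for later audit

    def submit(self, action: Action) -> str:
        """Execute, escalate, or block an action, and log the outcome."""
        if action.amount <= self._auto_limit:
            status = "executed"
        elif self._reviewer(action):
            status = "approved"
        else:
            status = "blocked"
        if status != "blocked":
            self._execute(action)
        self.log.append((action.agent_id, action.name, status))
        return status
```

The design choice here is that the agent never calls the downstream service directly. Every action passes through `submit`, so low-risk actions stay fast while anything above the threshold waits for the reviewer's decision.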

3. Response: What Happens When AI Agents Go Wrong?

Even with proper attribution and interaction mechanisms, AI agents will inevitably make mistakes, be exploited, or act in ways that require intervention. The response function ensures that when something goes wrong, there’s a way to detect, report, and correct AI-driven actions.

Key response mechanisms include:

- Incident Reporting – Systems that detect harmful or unintended agent actions and report them to relevant authorities.

- Rollbacks & Corrective Actions – Infrastructure that allows organizations to reverse, modify, or mitigate harmful AI-driven outcomes.

For example, if an AI agent incorrectly processes a financial transaction or generates misleading medical advice, response mechanisms should detect the issue and trigger corrective actions.
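The financial-transaction case can be sketched in a few lines of Python. This is a toy model under stated assumptions: the `Ledger` class, the `flag_suspicious` detector, and its simple amount threshold are invented for illustration, and real detection and reversal logic would be far more involved.

```python
class Ledger:
    """Toy account ledger with reversible transfers (illustrative only)."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self.history = []    # every transfer an agent has applied
        self.incidents = []  # reports kept for auditors or regulators

    def transfer(self, agent_id, source, target, amount):
        """Apply a transfer and return its index in the history."""
        self.balances[source] -= amount
        self.balances[target] += amount
        self.history.append({
            "agent_id": agent_id, "source": source, "target": target,
            "amount": amount, "rolled_back": False,
        })
        return len(self.history) - 1

    def rollback(self, index, reason):
        """Reverse one transfer and file an incident; refuse to reverse twice."""
        entry = self.history[index]
        if entry["rolled_back"]:
            return False
        self.balances[entry["source"]] += entry["amount"]
        self.balances[entry["target"]] -= entry["amount"]
        entry["rolled_back"] = True
        self.incidents.append(
            {"transfer": index, "agent_id": entry["agent_id"], "reason": reason}
        )
        return True


def flag_suspicious(ledger, max_amount):
    """Naive detector: indexes of transfers above a threshold still in effect."""
    return [
        i for i, entry in enumerate(ledger.history)
        if entry["amount"] > max_amount and not entry["rolled_back"]
    ]
```

Note that `rollback` marks the original entry instead of deleting it, so the record of what the agent did survives the correction.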

Why Do These Functions Matter?

Attribution, interaction, and response are the foundation of safe and reliable AI agent deployment. Without them, AI-driven systems would be vulnerable to:

- Lack of accountability – making it impossible to determine responsibility for AI-driven actions.

- Unregulated interactions – leading to inefficiencies, security risks, or unintended consequences.

- No recourse for errors – leaving businesses and users exposed to financial, legal, or reputational damage.

As AI agents continue to expand into high-stakes industries, building robust infrastructure is the only way to ensure they operate within ethical and operational boundaries.

Challenges and Open Questions

Building a robust agent infrastructure means overcoming technical, ethical, and regulatory hurdles. The paper highlights several key challenges that must be addressed before AI agents can operate safely and efficiently at scale.

Attribution Is Easier Said Than Done

While identity binding and agent IDs sound like straightforward solutions, implementing them raises major challenges:

- Privacy Concerns – How do we track AI agent actions without violating user privacy?

- Decentralized Agents – Many AI agents operate across multiple platforms and organizations. Who owns the attribution data? Who enforces accountability?

- False Attribution Risks – What happens if an AI agent is hijacked or manipulated? How do we prevent misattributions that could penalize the wrong entity?

Without clear attribution mechanisms, AI-generated content, financial transactions, and automated decisions could become untraceable and unaccountable.

Interaction Needs Standardization

Different companies are developing AI agents using different architectures for different purposes. Without interoperability, they won’t be able to interact effectively, leading to:

- Fragmented ecosystems – AI agents built by different companies may struggle to communicate.

- Security vulnerabilities – Weak oversight layers could lead to AI agents engaging in unintended or even malicious interactions.

- Ethical conflicts – Should all AI agents be allowed to interact with each other? If not, who decides which interactions are permissible?

Developing global standards and protocols for AI agent interactions will be a massive challenge—one that requires collaboration between governments, researchers, and industry leaders.
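No such standard exists yet, but a shared message format is one piece any protocol would likely need. The sketch below assumes a made-up envelope with five fields and a made-up version string `"0.1"`. None of these names come from the paper or from an existing specification.

```python
import json
from dataclasses import asdict, dataclass

SUPPORTED_VERSIONS = {"0.1"}  # hypothetical protocol version
REQUIRED_FIELDS = {"version", "sender_id", "recipient_id", "intent", "payload"}


@dataclass(frozen=True)
class Envelope:
    """Hypothetical wrapper every agent-to-agent message would travel in."""
    version: str
    sender_id: str
    recipient_id: str
    intent: str
    payload: dict


def encode(envelope):
    """Serialize an envelope to JSON text for transport."""
    return json.dumps(asdict(envelope), sort_keys=True)


def decode(raw):
    """Parse JSON text, rejecting malformed or unsupported messages."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("envelope must be a JSON object")
    missing = REQUIRED_FIELDS - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if data["version"] not in SUPPORTED_VERSIONS:
        raise ValueError(f"unsupported version: {data['version']}")
    return Envelope(**{name: data[name] for name in REQUIRED_FIELDS})
```

Agents from different vendors that agree on an envelope like this can at least reject messages they do not understand, rather than guessing at their meaning.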

How Do We Correct AI Agent Mistakes?

The response function is critical—but current AI systems lack effective rollback mechanisms. Some key questions remain unanswered:

- What level of control should humans have over AI agent actions?

- How do we ensure rollback mechanisms don’t get abused (e.g., by bad actors using them to erase evidence of AI misbehavior)?

- Who decides what counts as “harmful” AI behavior? Different stakeholders may have conflicting views on what an AI should or shouldn’t be allowed to do.

Until we develop scalable, unbiased, and transparent response mechanisms, the risk of AI agents making irreversible errors will remain high.
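One common technique that speaks to the rollback-abuse question is an append-only, hash-chained audit log, where a rollback is recorded as a new event rather than a deletion. The sketch below is an assumption on our part, not something the paper prescribes, and the `AuditLog` class and its event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, event):
    """Hash an event together with the hash of the entry before it."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class AuditLog:
    """Illustrative tamper-evident log: each entry commits to its predecessor."""

    def __init__(self):
        self.entries = []

    def append(self, event):
        """Add an event (a JSON-serializable dict) and return its hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = _digest(prev_hash, event)
        self.entries.append({"event": event, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self):
        """Recompute the chain; any edited or removed entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True
```

With this structure, someone who edits an earlier entry, or removes one from the start or middle of the log, causes `verify` to fail. Cutting entries off the end is not detected by the chain alone, so the latest hash would also need to be stored somewhere the log's operator cannot change. The sketch makes tampering detectable. It does not prevent it, and it does not settle who gets to run the verification.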

Regulatory Uncertainty Slows Progress

AI governance is still in its early days, and no universal legal framework exists for managing AI agents at scale. This creates several obstacles:

- Jurisdictional conflicts – Different countries have different AI laws. How do we manage cross-border AI agent interactions?

- Liability questions – If an AI agent makes a bad financial trade or a wrong medical recommendation, who is responsible? The developer? The business using it? The AI itself?

- Lack of enforcement mechanisms – Even if AI regulations exist, who enforces them? Governments? Industry groups? Self-regulation?

Without clear regulatory frameworks, businesses and developers risk legal uncertainty every time they deploy AI agents.

The Road Ahead: Can We Solve These Challenges?

The paper makes one thing clear: we’re still in the early days of agent infrastructure development. While we know what needs to be done, we don’t yet have all the answers.

Solving these challenges will require:

- Stronger collaboration between AI developers, regulators, and policymakers.

- New technical solutions that balance privacy, accountability, and security.

- Standardized protocols to ensure AI agents can interact safely across different environments.

If we get this right, AI agents will be able to operate more efficiently, safely, and ethically. But if we don’t, we’re looking at a future filled with unregulated, unaccountable, and potentially dangerous AI-driven systems.

The Future of AI Agents Depends on Infrastructure

AI agents are no longer futuristic—they’re already shaping industries, from finance and healthcare to e-commerce and legal automation. But as we’ve seen throughout this discussion, building powerful AI agents isn’t enough. Without the right infrastructure, we risk creating systems that are unaccountable, insecure, and unpredictable.

The research paper makes it clear: AI agents need structured external systems to function safely and effectively. Attribution ensures responsibility, interaction establishes secure communication, and response mechanisms prevent unintended consequences. Without these, AI agents become liabilities rather than assets.

This Is Where Qubinets Comes In

This is where we offer a real-world solution. Instead of leaving businesses to figure out AI agent infrastructure from scratch, Qubinets provides a self-hosted environment where companies can deploy AI agents with full control over governance, security, and operational stability.

How Qubinets Solves the AI Infrastructure Challenge

✅ Accountability (Attribution) – By allowing businesses to self-host AI agents, Qubinets ensures that actions are always tied to the organization’s own cloud or data centre, eliminating the risks of third-party dependencies and ambiguous responsibility.

✅ Seamless Operations (Interaction) – AI agents need to interact securely with databases, APIs, and other systems. Qubinets provides pre-built integrations and standard communication protocols, ensuring that AI agents function reliably across enterprise environments.

✅ Oversight & Control (Response) – Mistakes happen, and AI agents need rollback and monitoring mechanisms. Since Qubinets keeps everything inside the user’s infrastructure, companies can track, audit, and correct AI agent actions in real time, before they cause operational or compliance issues.

Businesses deploying AI-powered solutions need to ask themselves:

- Who controls and monitors our AI agents?

- What happens when an AI agent makes a wrong decision?

- Are we exposing ourselves to legal and compliance risks?

- How do we prevent unintended interactions between AI agents and enterprise systems?

For companies serious about AI, ignoring infrastructure is no longer an option. Solutions like Qubinets help businesses deploy AI agents responsibly, providing the technical foundation necessary for scaling AI safely.

That’s the future of AI, and it starts with infrastructure that actually works.